What Is AWS S3?

Amazon’s AWS S3 is a versatile, economical, and safe way of storing data objects in the cloud. The name stands for “Simple Storage Service,” and it provides a simple organization for storing and retrieving information. Unlike a database, it doesn’t do anything fancy. It does one thing: letting you store as much data as you want. Its data is stored redundantly across multiple sites. That makes the chances of data loss or downtime tiny, far lower than they would be if you used on-premises hardware. It has good security, with options to make it still stronger.

S3 vs. other services

S3 isn’t a database, in the sense of a service with a query language for adding and extracting data fields. If that’s what you want, you should look at Amazon’s RDS. With RDS, you can choose from several different SQL engines. Alternatively, you can host a database on your own servers, with all the responsibility that entails. S3 is more economical than RDS if you don’t need all the features of a database.

S3 also isn’t a full-blown file system. It consists of buckets which hold objects, but you can’t nest them inside other buckets. For a general-purpose, hierarchical file system, you should look at Amazon’s EFS or set up a virtual machine and use its file directories. If you set up a cloud VM using a service like EC2, you pay for storage as part of the VM’s ongoing costs.

AWS S3 is optimized for “write once, read many” operation. When you update an object, you replace the whole object. If your data requires constant modifications, it’s better to use RDS, EFS, or the local file system of a VM.

The basics of S3

The organization of information in S3 is very simple. Information consists of objects, which are stored in buckets. A bucket belongs to one account. An object is just a bunch of data plus some metadata describing it. Metadata are key-value pairs. S3 works with the metadata, but the object data is just a collection of bytes as far as it’s concerned.

You can save multiple versions of an object, letting you go back to an earlier version if you change or delete something by mistake. Within a bucket, every object has a key and a version ID; together with the bucket name, they identify it uniquely across all of S3.
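
To make the bucket, key, and version idea concrete, here is a small in-memory sketch. It is a toy model only and not the S3 API: the class name ToyBucket and its put and get methods are made up for illustration, and the version IDs are random strings rather than anything S3 would generate.

```python
import uuid


class ToyBucket:
    """In-memory stand-in for a versioned bucket (hypothetical, not the S3 API)."""

    def __init__(self, name):
        self.name = name
        # Maps each key to a list of (version_id, data, metadata), oldest first.
        self._versions = {}

    def put(self, key, data, metadata=None):
        """Store a whole new version of the object and return its version ID."""
        version_id = uuid.uuid4().hex
        entry = (version_id, bytes(data), dict(metadata or {}))
        self._versions.setdefault(key, []).append(entry)
        return version_id

    def get(self, key, version_id=None):
        """Return (data, metadata) for the latest version, or for a given one."""
        versions = self._versions.get(key, [])
        if not versions:
            raise KeyError(key)
        if version_id is None:
            _, data, metadata = versions[-1]
            return data, metadata
        for vid, data, metadata in versions:
            if vid == version_id:
                return data, metadata
        raise KeyError((key, version_id))


bucket = ToyBucket("example-bucket")
first = bucket.put("report.txt", b"draft", {"content-type": "text/plain"})
bucket.put("report.txt", b"final", {"content-type": "text/plain"})

print(bucket.get("report.txt")[0])          # latest version
print(bucket.get("report.txt", first)[0])   # earlier version, fetched by ID
```

Note that every put stores the whole object again, which mirrors the replace-the-whole-object behavior described earlier.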

You can specify the geographic region a bucket is stored in. That lets you keep latency down, and it may help to meet regulatory requirements.

Normally S3 reads or writes whole objects, but S3 Select allows retrieving just part of an object. This is a new feature available to all customers.

Uses for S3

Wherever an application calls for retrieving moderate to large units of data that don’t change often, S3 can be a great choice.

- Backup: S3 can hold a backup copy of a website, a database, or a whole disk. With very high durability, it gives confidence your data won’t be lost.

- Disaster recovery: A complete, up-to-date disk image can be stored on S3. If a disaster makes a primary server unavailable, the saved image is available to launch another server and keep business operations going.

- Application data: S3 can hold large amounts of data for use by a web or mobile application. For instance, it could hold images of all the products a business sells or geographic data about its locations.

- Website data: S3 can host a complete static website (one which doesn’t require running any code on the server). To set it up, you tell S3 to configure a bucket as a website endpoint.

Access control and security

Buckets and objects are secure by default, and you can make them more secure by applying the right options. You have control over how they’re shared, and you can encrypt the data.

The system of bucket policies gives you detailed control over access. You can limit access by account, IP address, or membership in an access group. Multi-factor authentication can be mandated. Read access can be open to everyone while write access is restricted to just a few users. If you prefer, you can use AWS IAM to manage access.
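
As a rough illustration of a policy that opens read access while leaving write access restricted, the sketch below builds a bucket policy document as a Python dictionary. The bucket name and the IP range are placeholders, and the helper function is hypothetical; only the overall JSON shape (Version, Statement, Effect, Principal, Action, Resource, Condition) follows the standard policy format.

```python
import json


def public_read_policy(bucket_name, allowed_cidr=None):
    """Build a policy document that allows reading objects and nothing else.

    bucket_name and allowed_cidr are placeholders chosen for this example.
    """
    statement = {
        "Sid": "PublicRead",
        "Effect": "Allow",
        "Principal": "*",
        # Only object reads are granted; no write action appears here.
        "Action": "s3:GetObject",
        "Resource": f"arn:aws:s3:::{bucket_name}/*",
    }
    if allowed_cidr:
        # Optionally limit readers to one IP range.
        statement["Condition"] = {"IpAddress": {"aws:SourceIp": allowed_cidr}}
    return {"Version": "2012-10-17", "Statement": [statement]}


policy = public_read_policy("example-bucket", "203.0.113.0/24")
print(json.dumps(policy, indent=2))
```

The printed JSON is the kind of document you would attach to a bucket as its policy. Because the statement grants only s3:GetObject, uploads and deletes stay limited to whoever already has those permissions.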

For additional protection of data, you can use server-side or client-side encryption. That way, even if someone steals a password and gets access to your objects, they won’t be able to do anything with them.

Pricing

The cost of S3 storage depends on how much you use, and it varies by region. New AWS customers can use the Free Tier to get 5 GB of storage, 20,000 get requests, 2,000 put requests and up to 15 GB of data retrieval. The Free Tier is available for one year.

In the United States, the first 50 terabytes of S3 standard storage are available for $0.023 to $0.026 per GB per month, depending on the region. The price per GB drops slightly for higher usage levels. Taxes are additional.

There is a cost for reading and writing data in addition to the storage cost. In accordance with the “write once, read many” philosophy, requests that retrieve data are much less expensive than ones that write it. Retrieving costs just $0.0004 per thousand requests in most of the US, while writing data costs $0.005 per thousand requests. An infrequent access option is available, which costs less for storage but more for access.
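
A back-of-the-envelope estimate using the figures quoted above might look like the following sketch. It assumes the low end of the storage price range and ignores the Free Tier, data transfer charges, volume discounts, and taxes, so treat the result as a rough guide rather than a bill.

```python
# Prices quoted in this article; actual prices vary by region and over time.
STORAGE_PER_GB_MONTH = 0.023   # low end of the quoted US range
GET_PER_THOUSAND = 0.0004      # retrieval requests
PUT_PER_THOUSAND = 0.005       # write requests


def monthly_s3_cost(stored_gb, get_requests, put_requests):
    """Estimate one month of standard storage plus request charges, in dollars."""
    storage = stored_gb * STORAGE_PER_GB_MONTH
    reads = get_requests / 1000 * GET_PER_THOUSAND
    writes = put_requests / 1000 * PUT_PER_THOUSAND
    return round(storage + reads + writes, 2)


# Example workload (made up): 100 GB stored, 1,000,000 reads, 10,000 writes.
print(monthly_s3_cost(100, 1_000_000, 10_000))
```

For this made-up workload the estimate comes to about $2.75 a month: $2.30 for storage, $0.40 for reads, and $0.05 for writes.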

Getting started

If you have an AWS account, setting up S3 usage is straightforward. From the console, select the S3 service. You’ll be given the option to create a new bucket. You need to give it a unique name and select a region. There are a number of options you can then choose, including logging and versioning. Next, you can give permission to other accounts to access the bucket. The console will let you review your settings, after which you confirm the creation of the bucket.

Next, you can upload objects to the bucket and set permissions and properties for them. If you’re using S3 through other AWS services, you may never need to upload directly. You’ll still want to check the S3 console occasionally to verify that your usage and costs are in the range you expected and that bucket authorizations are what they should be.

When deciding whether S3 is the best way to handle the storage for your application, evaluate how it stacks up against your needs. If you don’t require a full file system and you don’t need to rewrite data often, S3 can be a very cost-effective choice. It provides high data availability and security at a very reasonable price.

AWS Lambda vs. Elastic Beanstalk

You’re contemplating a software migration from your own servers to cloud hosting. There are many ways to do it, with varying options. This article looks at two of them from Amazon Web Services: Lambda and Elastic Beanstalk. Both are great choices, but they serve different purposes. Lambda is simpler and less expensive, while Elastic Beanstalk lets you run full applications and gives you control over their environment. Understanding each one’s strengths will let you make an informed choice between these AWS services.

Designing an Application for the Cloud | A Case Study

Case Study Overview

Our team designed a cloud-based solution using AWS Lambda, AWS S3, Docker Containers on AWS Fargate, AWS Step Functions, AWS API Gateway, AWS RDS, and Slack integration in order to allow for intermittent, heavy compute by a company analyzing data collected via drone for the agriculture industry.

Project Background

Our team recently put together a design to solve a pretty interesting problem that an innovative business sent our way.

This firm specializes in analyzing data collected by remote sensors and drawing conclusions from it. They primarily serve the agriculture sector.

An example of the conclusions they can draw using their proprietary algorithms is an index of plant growth on a farm. Using sensor data, they do image analysis to come up with a measure of how crop growth is coming along during the growing season.

They have a number of such algorithms that they have developed in-house. When they develop an algorithm, they first do it on their own computers. Then, when they feel it is ready for a test run, they publish it to shared resources and run it with client data. It is this process they were looking to automate.

They did not have an easy way to publish their algorithms, upload client data, and see the results. They wanted to be able to do this regardless of whether they were on or off network. Additionally, they wanted to spend as little time and money as possible on buying hardware, installing and patching operating systems, and maintaining servers. Finally, they expected that their algorithms would take a lot of computing power to run, but they didn’t run them all that often. This all pointed to a design using cloud services.

Design

AWS is our cloud service provider of choice since they hold the market lead in the space and their services are varied, reliable, and work well together. Since our client needed intense but intermittent computing power, we decided AWS Lambda and containerization would be at the center of our design. If you need a more thorough explainer of what AWS Lambda is and the purpose it serves, check out our earlier blog “What is AWS Lambda?“.

The design, pictured below, involves AWS Lambda as the glue that looks for new data, executes a containerized algorithm, and distributes the results to a couple of other services.

The diagram above was generated using Cloudcraft, our go-to tool for visualizing cloud architecture built around AWS.

Use of the system goes like this:

- The data scientist for the drone company publishes his or her algorithm inside a Docker container hosted on AWS Fargate, shown as “C4” on the diagram.

- They then dump client data into an S3 bucket, which triggers an AWS Lambda job that is monitoring the bucket for changes.

- This feeds into AWS Step Functions that execute the algorithm’s steps, outputting data after each portion of the algorithm (this was a client requirement; the algorithms generate intermediate states and the data from those states need to be recorded).

- The data is outputted to a destination S3 bucket, a Postgres database, an API for extensibility, and a Slack channel to keep the team up to date on the state of the jobs as they are running.
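
The hand-off in the steps above, from an S3 upload to the start of the workflow, could be sketched roughly as follows. This is an illustration and not the project's actual code: the function names are invented, and start_workflow is a hypothetical hook that a real deployment would presumably replace with a call that starts the Step Functions state machine. The event layout (Records, s3, bucket, object) follows the standard S3 notification format.

```python
import json
from urllib.parse import unquote_plus


def build_workflow_inputs(event):
    """Turn an S3 notification event into one workflow input per uploaded object."""
    inputs = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        inputs.append({
            "bucket": s3_info["bucket"]["name"],
            # Object keys arrive URL-encoded in S3 event notifications.
            "key": unquote_plus(s3_info["object"]["key"]),
        })
    return inputs


def handler(event, context, start_workflow=print):
    """Lambda-style entry point.

    start_workflow is a hypothetical hook; here it defaults to print so the
    sketch runs without any AWS access.
    """
    inputs = build_workflow_inputs(event)
    for item in inputs:
        start_workflow(json.dumps(item))
    return {"started": len(inputs)}


# A trimmed-down sample event with made-up bucket and key names.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "client-data"},
                "object": {"key": "farm+1/scan.tif"}}}
    ]
}
print(handler(sample_event, None))
```

Keeping the event parsing separate from the code that starts the workflow makes the parsing easy to test locally with sample events.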

There are several advantages of this design:

- Assuming that the S3 buckets are cleared out, this system incurs very little cost except when it is running. The AWS RDS PostgreSQL database will cost money, assuming you exceed the free tier, but the cost when not running is many times less than you would pay for a virtual machine hosting these services. Amazon provides a pricing calculator for RDS.

- Overall pricing should be much lower than when using a VM. Since price is so low when the system is not running, the overall price will also be lower than the price of a server-oriented architecture, which accrues costs as long as the VM is running. See more details on pricing below.

- Unlike a VM, once these services are connected they should not have to be reworked as long as Amazon supports backwards compatibility between the services. Amazon routinely versions its APIs so that the system should continue to work even as the API evolves. This means for the foreseeable future the thing will run without patching, updating, upgrading, or doing any of the other annoying maintenance tasks that you might be forced to do in a more traditional server-based architecture.

- The services are decoupled and communicate with each other through defined APIs. This means if you wanted to swap out a section of the system with another service, you would be able to do this. For instance, if you wanted to replace AWS Lambda in this diagram with Azure Functions, you would be able to do so with little rework.

Digging Into the Pricing Details

Let’s assume the following parameters for our system:

- 4 data scientists on the team each running a test algorithm every 2 hours every workday of the month

- 60 seconds to run the algorithm

- Consumes 8 GB of memory while running

- 1 GB of data going into and out of the S3 buckets and the RDS instance per run

Our Design:

AWS Lambda provides 400,000 GB-Seconds of compute for free every month, and we fall within that limit, meaning we’ll pay nothing for that. We’ll need about 6 compute hours per month of AWS Fargate, which costs us about $2. The only significant cost comes from the input/output of S3 and RDS, which totals about $200 per month. Total costs would come in at less than $250/month to host the application.

Alternative Design Using Virtual Machines:

A virtual machine on Amazon EC2 with 4 virtual cores, 16 GB of RAM, and a pre-loaded relational database runs you $3.708 per hour. Note that you need the extra RAM because we’re assuming we need 8GB of compute RAM when running the algorithms, plus we need extra to run the databases and Docker. There are 730 hours in your average month, meaning the virtual machine approach will run you $2,707 per month.
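
The arithmetic behind these estimates can be checked with a short script. Two inputs are assumptions that the article does not state: an 8-hour workday (so each data scientist starts 4 runs a day) and 22 workdays in a month. The remaining numbers come from the parameters and prices listed above.

```python
# Parameters taken from the article.
SCIENTISTS = 4
HOURS_BETWEEN_RUNS = 2
RUN_SECONDS = 60
MEMORY_GB = 8
FREE_GB_SECONDS = 400_000      # monthly Lambda free allowance
VM_HOURLY_RATE = 3.708         # quoted EC2 price, dollars per hour
HOURS_PER_MONTH = 730

# Assumptions made for this sketch (not stated in the article).
WORKDAY_HOURS = 8
WORKDAYS_PER_MONTH = 22

runs_per_day = SCIENTISTS * (WORKDAY_HOURS // HOURS_BETWEEN_RUNS)
runs_per_month = runs_per_day * WORKDAYS_PER_MONTH
compute_hours = runs_per_month * RUN_SECONDS / 3600

# Upper bound: treat every run as if it used the full 8 GB for the full minute.
gb_seconds = runs_per_month * RUN_SECONDS * MEMORY_GB

vm_monthly = VM_HOURLY_RATE * HOURS_PER_MONTH

print(f"runs per month:      {runs_per_month}")
print(f"compute hours:       {compute_hours:.1f}")
print(f"GB-seconds (bound):  {gb_seconds} of {FREE_GB_SECONDS} free")
print(f"always-on VM cost:   ${vm_monthly:,.0f} per month")
```

Under these assumptions the team makes 352 runs a month, which is just under 6 compute hours and about 169,000 GB-seconds, well inside the free allowance. The always-on virtual machine comes to roughly $2,707 a month.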

Conclusions

The results are clear. For this instance, where a number of cloud services can be glued together to satisfy an intermittent, heavy compute load, the purely serverless design is superior. It takes less time to maintain, requires no patching, and is about 10x less expensive.

We hope that this example design shows how AWS Services can be stitched together to create flexible, affordable solutions. If you would like to learn more, feel free to contact us.

The Benefits of the Cloud and What Prevents Adoption

Entrance Founder Nate Richards talking about the benefits the cloud can provide to you and what keeps people from adopting the cloud. Transcript:

The cloud itself allows companies to rapidly deploy new technologies inside the company.

It used to be that companies had to go and buy servers, which means they had to figure out the hardware, figure out the connectivity, figure out the backup, figure out the power, figure out the cooling, all the things that go into owning and for the full life cycle of that physical server. All of that adds cost, it adds time, it adds risk to the actual act of introducing new technology to a business.

With the cloud we are able to introduce this new technology overnight. We can turn on and off machines. We can cycle big powerful server loads down at night or even turn them off to save costs and turn them back on before employees come to work. We can turn them off on the weekends. These are the things we are able to do by using the cloud.

The problem with the cloud though, it’s no longer a security concern. Many people feel that the reason businesses are afraid of the cloud is because of security and we can certainly read about security breaches in the paper, or the digital equivalent of that, on blogs today, and we know that servers are often times subject to being hacked.

But the funny thing is usually those hacks are happening with physical servers in people’s environment. Because, in the cloud world, cloud providers would be out of business overnight if they were to get hacked, just one time. And they know a lot more about keeping servers and keeping data secure than usually private business enterprises do.

So then the limit of businesses getting to the cloud is not their fear of security issues, but increasingly it’s a tie to legacy applications that were not written for the cloud first, mobile first world. But instead, are tailored to be run on servers running inside that company’s network infrastructure.

Businesses usually get stuck there because they don’t know how to make the leap in to the cloud. Can some of those applications come forward with them on this transition? When a vendor says something is cloud ready or cloud friendly, what do they really mean by that? Sorting out these claims from reality often times is hard for our clients and that’s usually where we get brought in, to help them take these steps from the paper world, from the physical world, from the server world into the digital, mobile, cloud world where we can introduce change into the business overnight.

AWS Lambda and the Hybrid Cloud | Get Your App Off the Ground

This article will detail how to use the hybrid cloud and AWS services to get a custom app off the ground quickly and inexpensively.

You’ve got an app that’s so badly outdated it looks like it was cobbled together from tree branches and the hide of a mastodon during the Internet’s Stone Age. You want to bring that ancient app into the 21st Century by transforming it into something sleek and super-desirable.

So you tell your app design team to put on their thinking caps and stick their collective head in the clouds—that glittery dream-zone inside their brains where their creativity can merrily romp on the backs of unbridled unicorns.

But in addition to having their heads in the clouds, they also need to have their feet firmly planted on the ground.

Thanks to AWS Lambda (along with all the other vital AWS Services from Amazon), your app designers can be fully grounded in the realities of app creation.

In particular, AWS Lambda and AWS Services make it possible for your app designers to write and test code on the cheap.

As a result, they can put together an app concept, give it a micro-scale tryout, tweak the daylights out of the thing until it finally works exactly the way you want, and then make it available to your users after having spent practically nothing shepherding it through the proof-of-concept process.

Hybrid Cloud has Its Virtues

How does AWS Lambda make this possible? Before we answer that question, you should take a look at this cool AWS Lambda explainer article of ours for some essential background.

Basically, though, AWS Lambda and AWS Services help you get your app’s feet off the ground by putting its designer’s head in a different type of cloud—the hybrid cloud, to be exact.

You’re probably already dialed into what the hybrid cloud is. In case you’re not, it’s a virtual environment created by harnessing your physical workstations to a bunch of remote servers that will do the heavy lifting of building out and proving up your app prototypes.

Not to put too fine a point on it, but experts don’t fully agree on the definition of a hybrid cloud. One useful definition comes courtesy of Cloudnexion, which contends you’re talking hybrid cloud if these elements are present: an onsite infrastructure owned by you and connected to cloud resources; a co-location infrastructure connected to the cloud; a managed co-location/hosting infrastructure connected to the cloud; and cloud resources connected to other cloud resources.

However you care to define hybrid cloud, all you really need to know and appreciate is that AWS Lambda and AWS Services put you in it and make your life better when they do. They are the ideal tools for building and testing your apps.

AWS Lambda and AWS Services Save Money

The benefit of using AWS Lambda and AWS Services in the hybrid cloud for app development is you won’t face serious economic consequences for allowing your design team’s imagination to run riot during the entirety of the proof-of-concept phase.

When you use this development scheme, you can expect significant savings. Those savings, in turn, encourage you to innovate more. The idea here is the more you save, the more capital you have available for app R&D (unless, of course, you’re the greedy type who’d rather pocket all that extra coin and blow it on good times in Vegas or iced-out jewelry from HipHopBling’s).

The reason you save money by assembling and testing your app prototypes in a hybrid cloud environment is that you only pay for the resources you use. AWS Lambda and the other AWS Services make sure that’s the case for you.

Faster Build-Out

AWS Lambda lets you build apps faster thanks to the way it reduces the amount of code you need to write.

Call that the economy of coding. Or call it economical coding. Doesn’t matter. The fact is, you save time and effort (and, by extension, money) when you write fewer lines, which is possible because AWS Lambda enables your use of native services like API Gateway, DynamoDB, SNS, SQS, SES, Step Functions, Kinesis, Cognito, and more.

There are still other benefits to be gained from using AWS Lambda and AWS Services to build and prove-out your apps.

For starters, Amazon contends that your use of AWS Lambda and AWS Services typically results in the creation of higher-quality apps. For that, you can thank at least in part Amazon’s S3 and CloudFront content distribution network services (which AWS Lambda enables you to tap into for web app development hosting) and the AWS Device Farm (which permits cloud-integrated tests involving a sizable cohort of real, not virtual or simulated, iOS and Android phones).

Perhaps your curiosity is piqued as to which programming languages and frameworks the AWS collection supports. If so, you’ll be happy to know AWS Lambda and all AWS Services fully support Node, Python 2.7 and 3, Java 8, .NET Core, Go, and pretty much every other popular language or framework.

Early Signs of Success or Trouble

Another big benefit of using AWS Lambda and AWS Services in the hybrid cloud is that they help you know much sooner and far more reliably whether the app you’re working on will be a mega-hit or a total clown-show flop.

That sort of intelligence is useful, no? Yes, it is, because the earlier in the game that you’re able to see where all your efforts will ultimately lead, the faster you can make the right strategic decisions.

Say, for instance, your fledgling app looks like a success-in-the-making. You can use that insight to speedily plan for augmentations and scaling (both of which AWS Lambda and AWS Services make remarkably simple to achieve). If, on the other hand, the app looks like a loser, you can make course corrections or simply cut your losses before you’re in too deep.

You’re able to know what your chances of success are because AWS Lambda and AWS Services promote and facilitate engagement with your target audiences.

For example, through AWS Lambda and AWS Services, you can draw upon the analytics power of Amazon Pinpoint to identify who in your test audience is using your beta and then assess how they’re using it, when they’re using it, and for how long they’re using it.

Just Ask—AWS Lambda/AWS Services Open a Door

However, the one thing Pinpoint won’t do is flat-out tell you what they think of your app. Is it good? Is it bad? What do they like about it? What do they hate? What do they wish was different?

To get the answers to those questions, you need to directly poll your test audience. The way to do that is either via email or with a two-way SMS text exchange. Pinpoint lets you almost effortlessly create email and text queries—the content of which you can vary as desired to request specific feedback.

Speaking of emails and texts, Pinpoint makes it child’s play to issue push notifications. With these, you can run tailored campaigns in support of your new app.

All of this (and more) is accomplished in the server-less environment of the hybrid cloud.

We Can Help You Get Started

So, again, the idea behind AWS Lambda and AWS Services in the context of app proof-of-concept is that you get to put all your focus on making your app truly great instead of worrying about the costs and other non-innovation-related matters.

In a similar vein, you don’t have to worry about costs associated with idle capacity. It’s pay as you go. The only time you incur costs is when your code is running.

Also, if your app idea works, you then can set it up such that scaling occurs automatically (to scale up manually, you check off the amount of memory and throughput you want).

Bottom line: it pays to have your head stuck in the hybrid cloud. AWS Lambda and AWS Services will help your design team get theirs up there, while keeping your feet planted on solid financial ground.

Entrance Consulting can offer you a wealth of additional insights and ideas about how AWS Lambda and all the other outstanding AWS Services can enhance your app projects and get them over the finish line faster while keeping costs way down. Just drop us a line to say hello and let us know you’d like to learn more about AWS Lambda and AWS Services.

Why People Choose Custom Software

Entrance founder Nate Richards talking about the unique value that custom software brings to business. Transcript:

As a business why would you consider custom software today when there’s so much available off the shelf?

Number one is, the off the shelf software is built for the lowest common denominator in the industry so that it can be implemented over and over and over and over. But if I want to innovate, if I have some new thing to bring to the industry the software is going to really struggle to support that innovation. Often times it has to be a custom application.

Number two might be, I have some proprietary way of doing something and I’m looking to create a competitive advantage. I want to have something that my competitors don’t have. I want to give my customers an experience that my competitors can’t give them. And today that proprietary process, methodology, know-how, lives in people’s heads, lives in Excel spreadsheets. By building a piece of custom software we are able to capture that proprietary know-how into an experiential component of what we give our customers. So instead of giving them a report where the result of this proprietary process is just a chart, now I can create an interactive experience where they can have this competitive advantage as part of their daily experience with our company.

And finally is, so I do have all this off the shelf software in my business. I’ve got accounting software, I’ve got operations software, I’ve got my website and it really doesn’t all tie together. None of these systems talk to each other and I’m constantly finding pockets of people whose whole lives seem to be dedicated to copying and pasting, exporting and importing data to move around in this system of systems. And so by building a piece of custom software we’re able to create an overlay to unify all of these different disparate systems that are in the business.

What Is AWS Lambda, and Why You’re About to Become a Huge Fan

What is AWS Lambda to someone like you who hates wasting money and losing sleep at night? Everything. Because AWS Lambda is a sweet piece of worry-free tech you pay for only when it’s actually doing its thing.

And its thing is running code. Your code.

If there’s no need for code to run, then Lambda goes silent and does nothing. It can lounge around by the pool on its tiny sliver of virtual real estate up there in the hybrid cloud services ecosystem without you footing the bill.

The “AWS” in AWS Lambda stands for “away with (traditional) servers.” No, no. It stands for Amazon Web Services. That’s Amazon, as in the massively big online retailer where vast numbers of people buy the desktop computers, laptops, tablets, and phones to access your websites and apps.

But the AWS might just as well stand for “away with traditional servers” because Lambda is a serverless hosting service. It allows you to cater to gazillions of visitors and users without having to buy or manage a bunch of physical servers and related infrastructure.

AWS Lambda vs Virtual Machines

AWS services from Amazon include an offering that amounts to an intermediate step between physical servers and Lambda. It’s EC2, and what EC2 is all about is giving you the ability to replace your traditional servers with virtual ones.

Once you do that, you avoid having to manage any physical servers, which can be a real pain in the byte if you have short-run, infrequent workloads and are strapped for money to buy traditional machines.

So, how does AWS Lambda fit into this? Let Amazon itself explain it:

“Amazon EC2 allows you to easily move existing applications to the cloud. With EC2 you are responsible for provisioning capacity, monitoring fleet health and performance, and designing for fault tolerance and scalability.

“AWS Lambda [on the other hand] makes it easy to execute code in response to events….Lambda performs all the operational and administrative activities on your behalf, including capacity provisioning, monitoring fleet health, applying security patches to the underlying compute resources, deploying your code, running a web service front end, and monitoring and logging your code. AWS Lambda provides easy scaling and high availability to your code without additional effort on your part.”

In other words, EC2 is the virtual hardware. AWS Lambda is the virtual software. EC2 management and maintenance falls to you. Lambda management and maintenance falls to Amazon.

Helpful Links for your AWS Lambda Research

Entrance’s primer on serverless architecture

Entrance’s post on how companies can use serverless to get apps off the ground faster and cheaper

n Reasons to Embrace Serverless Architecture

AWS Lambda vs Elastic Beanstalk

OK, now you’re probably wondering how AWS Lambda stacks up to app hosting services like Amazon’s Elastic Beanstalk.

In an AWS Lambda vs Elastic Beanstalk matchup, you should put your money on AWS Lambda. Actually, any money you put on AWS Lambda will be a lot less than what you plunk down on those other app hosting services.

Here’s why. Elastic Beanstalk makes you pay for uptime and availability. AWS Lambda does not.

Something else worth mentioning. AWS Lambda will let you slice your apps as granularly as you like. Can’t do that with Elastic Beanstalk. A Lambda function can be a single API endpoint, a collection of endpoints, or an entire app backend.

In addition, AWS Lambda works more easily with other AWS services. The icing on this cake is the fact that Amazon totally dominates the hybrid cloud services ecosystem. Pick AWS Lambda and you’re picking Number One.

AWS Lambda vs Azure Functions

Then there’s Microsoft’s Azure Functions, which is very similar to AWS Lambda.

Both run code in response to triggers defined by you. And both are totally compatible with other services within their respective tribes. For example, AWS Lambda lets you easily set up triggers via Amazon’s API Gateway, DynamoDB, and S3 services, while Azure Functions lets you almost effortlessly configure your triggers with Azure Storage and Azure Event Hubs.

As for AWS Lambda vs Azure Functions differences, there are a few of note. One is the way they each handle short-term inactivity between events that trigger a code run. Another is that AWS Lambda allocates application resources to each function independently, whereas Azure Functions allocates them to whole groups.

The real tie-breaker here is that AWS Lambda is a creation of Amazon and its Web Services league of super-inventive minds. That’s why AWS is the leader in this space. So, in this cloud services ecosystem, AWS Lambda emerges as the clear victor.

Why AWS Lambda is a Must-Have

The bottom-line for you is that AWS Lambda lets you run app code on the cheap-and-easy.

What’s more, your code can be for almost any type of app—or, for that matter, almost any type of backend service.

And you can pretty much give your code zero thought once you upload it to AWS Lambda because AWS Lambda runs your code only when there’s a trigger event—and it’s up to you what that event will be.

For example, maybe the event is the placing of an online order. That’s something definitely requiring a code-directed response. Perhaps the shape of that response will be to have information about that transaction sent to your data warehouse where relevant analytics can then be generated. AWS Lambda will make sure the code it takes to accomplish all that runs, and that it runs correctly.

Or try this. Let’s say you make or sell online sensor-equipped refrigerators for the Internet of Things marketplace. It could be a long time before the buyer runs low enough on milk for that refrigerator to order more from the grocery store on his or her behalf.

But until then, the sensors probably won’t be relaying information about the milk situation. AWS Lambda knows this. As a result, it will run the code that causes a milk order to be placed only if and when the sensors call attention to that low dairy drink situation.

One last example: traffic updates. Your user who’s getting ready to head off for work wants to find out about road conditions. So he or she clicks the link at your traffic app. Your app makes a REST API call to the endpoint on the other side of the gateway. Lambda instantly shifts into gear and hits the gas pedal by running code to obtain local traffic info and then sending that news back across the gateway to the waiting user.
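To make that last flow a little more concrete, here is a minimal sketch of what such a function could look like. The request and response shapes are simplified stand-ins invented for this example (they are not the real AWS Lambda types), and `lookupTraffic` with its canned city data is a hypothetical placeholder for a real traffic source.

```typescript
// Simplified, hypothetical request/response shapes (not the real AWS types).
interface HttpEvent {
  queryStringParameters: { [name: string]: string } | null;
}

interface HttpResponse {
  statusCode: number;
  body: string;
}

// Hypothetical stand-in for a real traffic data source.
function lookupTraffic(city: string): string {
  const reports: { [city: string]: string } = {
    houston: "Heavy congestion on the westbound freeway",
    austin: "Roads are clear",
  };
  const key = city.toLowerCase();
  return Object.prototype.hasOwnProperty.call(reports, key)
    ? reports[key]
    : "No data available";
}

// Runs only when a request arrives; nothing executes in between requests.
function handler(event: HttpEvent): HttpResponse {
  const params = event.queryStringParameters;
  const city = params !== null ? params["city"] : undefined;
  if (city === undefined || city === "") {
    return { statusCode: 400, body: JSON.stringify({ error: "city is required" }) };
  }
  return {
    statusCode: 200,
    body: JSON.stringify({ city: city, traffic: lookupTraffic(city) }),
  };
}
```

The point of the sketch is the shape of the work: one small function, one event in, one response out, and no server code around it.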

AWS Lambda Pricing

Again, saving you money is a gigantic piece of the idea behind running code only when pre-defined triggering events take place. This is why AWS Lambda has you paying for just the resources you actually consume.

Now, what is AWS Lambda pricing based on? It’s based on the number of triggering events (requests) and on how long your code runs, metered in 100-millisecond units and scaled by the memory you allocate. Amazon offers a couple of price scenarios to show you how this works in real life.

Scenario 1: You give your app function 512MB of memory. This function fires 3 million times a month (about 1 per second). Every time it fires, the function runs for 1 full second. Your cost would be just under $19 for a 30-day billing cycle.

Not too shabby.

Scenario 2: You allocate only 128MB of memory to your function, but now it runs 30 million times a month (about 10 per second). This time, each run lasts just 200 milliseconds. Your cost in this instance would be less than $12 a month.
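If you want to see where numbers like these come from, the sketch below reproduces both scenarios. The rates and free-tier allowances are assumptions taken from the list prices Amazon published around the time of writing ($0.20 per million requests, $0.00001667 per GB-second, with 1 million requests and 400,000 GB-seconds free each month), so check the current pricing page before relying on them. Scenario 2 is computed with 200 ms per run, which is the duration that lands under $12.

```typescript
// Assumed list prices and free tier; verify against the current AWS pricing page.
const PRICE_PER_MILLION_REQUESTS = 0.20; // USD
const PRICE_PER_GB_SECOND = 0.00001667;  // USD
const FREE_REQUESTS = 1000000;           // per month
const FREE_GB_SECONDS = 400000;          // per month

function monthlyLambdaCost(
  invocations: number,
  secondsPerRun: number,
  memoryMb: number
): number {
  // Compute is billed in GB-seconds: run time multiplied by allocated memory.
  const gbSeconds = invocations * secondsPerRun * (memoryMb / 1024);
  const billableGbSeconds = Math.max(gbSeconds - FREE_GB_SECONDS, 0);
  const billableRequests = Math.max(invocations - FREE_REQUESTS, 0);
  const computeCharge = billableGbSeconds * PRICE_PER_GB_SECOND;
  const requestCharge = (billableRequests / 1000000) * PRICE_PER_MILLION_REQUESTS;
  return computeCharge + requestCharge;
}

const scenario1 = monthlyLambdaCost(3000000, 1, 512);    // roughly 18.74
const scenario2 = monthlyLambdaCost(30000000, 0.2, 128); // roughly 11.63
```

Notice that scenario 2 handles ten times the requests for less money, because each run is shorter and uses a quarter of the memory.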

Confusing, right? So it’s a good thing Amazon offers a free calculator to figure out your monthly charges based on your exact situation. Access it at http://calculator.s3.amazonaws.com/index.html

Now that you know what AWS Lambda is, your next moves should be to sign up for an AWS account, follow the step-by-step tutorials, and use the AWS Management Console to put your code in place.

After that, you can let a big, bright smile completely take over your face: a smile that comes from enjoying the silence of the Lambda until the moment its help is actually needed. Until then, enjoy the sense of freedom that comes from not being weighed down by worries and costs.

5 Reasons to Embrace Serverless Architectures in 2018

Serverless architecture is here, it’s been proven, and companies from start-ups to Fortune 500 are jumping on this rapidly evolving bandwagon. If you aren’t familiar with the concept, read this first and then come back so I can convince you why you should want this.

1 – Avoid Under-Utilization

This one should be obvious, but it is the primary reason serverless is my preferred design approach, so I’ll say it anyway. In a world in which you are paying-per-use, if you don’t use–you don’t pay. No commitment and zero upfront costs. Imagine you just launched an amazing app, and no one showed up. That would be terrible, yes, but imagine if you were also paying to keep dozens of servers idle while waiting for everyone to show up. You are clearly worse off in the second scenario.

Still confused? Serverless means you are paying per request, per unit of data stored, and per second of compute time. The more you use–the more you pay. Cloud service providers are more than happy to charge us via a metered business model. Fortunately, this exposes another benefit of serverless: cost transparency. With the right instrumentation and analytics, you could attach costs to a business unit, team, product line, or even a single end-user. This kind of transparency would be incredibly challenging, if not impossible, in traditional server-based architectures.

2 – Focus on Delivering Business Value

Serverless means no hardware to set up, no servers to provision, and no OS or database to install. Write a function, deploy the function, and execute the function. Alternatively, you don’t have to reinvent the wheel or roll out DIY functions. There are services on the market for various tasks: authentication, SMS, marketing and transactional emails, user analytics, customer payment, AI, and so many more. This kind of rapid development means you can quickly prototype an idea and get it into the hands of your users sooner. This reduces the time it takes to realize the business impact (good and bad) and allows you to experiment with more ideas at a much faster pace.

3 – Skip Hardware & OS Overhead

This one is right in the name. No servers in production means no servers to manage. AWS (Amazon), Azure (Microsoft), and Google are more than happy to take our money in exchange for this abstraction. Personally, I am more than happy to check some boxes and hand over my money. Security & monitoring? Taken care of. Patch Tuesday? Not my problem. Broken server? I’m not thinking about it.

This is not to say there is nothing to think about, but your internal IT team and developers (if you have any) can focus on more interesting problems.

4 – Seamlessly Scale to Infinity*

Maybe not actual infinity, but you can scale to incredibly large loads and volumes using services like Lambda and DynamoDB (AWS). If your needs are beyond the default AWS Lambda limits (1,000 concurrent function executions), then I’d like to hear from you. Keep in mind that 1,000 concurrent executions could translate to 5,000 executions per second if each function takes approximately 200 ms. That is before implementing a cache layer or using multiple AWS Regions.

For fun, let’s run a function 5 times per second for an entire month (30 days), which would result in 12,960,000 Lambda executions. Assuming 200 ms compute time @128 MB of RAM, it would cost about $7.99 before any free-tier allowance. Breakdown: $2.59 for the requests, and $5.40 for the compute time.

You can compress the timeframe and still only pay $7.99. In other words, could your data center handle an additional 12.9 million requests across a single month? Maybe. What about an extra 12.9 million in a single day? In a single hour?
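For anyone who wants to check my math, here is a small sketch of both calculations: the throughput a concurrency limit can sustain, and the monthly bill for 5 executions per second. The two rates ($0.20 per million requests and $0.00001667 per GB-second) are assumed list prices, and the free tier is deliberately left out to match the figures quoted here.

```typescript
// Throughput a concurrency limit can sustain: concurrent slots / seconds per run.
function maxExecutionsPerSecond(concurrencyLimit: number, secondsPerRun: number): number {
  return concurrencyLimit / secondsPerRun;
}

// Assumed list prices; the free tier is intentionally ignored in this sketch.
const REQUEST_RATE_PER_MILLION = 0.20; // USD
const COMPUTE_RATE_PER_GB_SECOND = 0.00001667; // USD

const executionsPerSecond = 5;
const secondsInMonth = 60 * 60 * 24 * 30;
const executions = executionsPerSecond * secondsInMonth; // 12,960,000

const requestCost = (executions / 1000000) * REQUEST_RATE_PER_MILLION;
const computeCost = executions * 0.2 * (128 / 1024) * COMPUTE_RATE_PER_GB_SECOND;
const totalCost = requestCost + computeCost; // roughly 7.99

const throughput = maxExecutionsPerSecond(1000, 0.2); // roughly 5,000 per second
```

The bill depends only on the number of executions and their duration, not on how tightly they are packed in time, which is why compressing the timeframe leaves the total unchanged.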

Economies of scale allow us, non-cloud providers, to tap into immense power and flexibility on-demand at significantly lower costs with little-to-no commitment.

5 – Events over Data

The last decade has been largely focused on data. Bringing it to the web. Pushing it to the cloud. Visualizing, analyzing, and dumping it into cubes. Part of this effort was justified because sometimes it takes a ton of data to answer certain questions. Having said that, it might make sense to think of the business problem as a series of events instead of the aggregation of data.

Imagine a pipe. Now imagine “stuff” flowing through this pipe: product purchases, invoices received, creation of new customers, support tickets, and application errors. Many different applications can be pouring events into this pipe, and many applications can be watching and responding to this stream of events. You may have heard of stream processing or log processing; this is approximately what they are referring to. This idea aligns quite well with philosophies like Agile Development and Domain-Driven Design. Create a bunch of producer (event-creating) applications. Later down the road, as requirements and needs become clear, create various consumers (event readers) to analyze or respond to the events.
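Here is a toy version of that pipe, just to show the shape of the idea. `EventPipe` is a hypothetical in-memory stand-in for a managed stream, and the event shape is an assumption for illustration; a real stream would add durability, ordering guarantees, and scaling that this sketch does not attempt.

```typescript
// A business event flowing through the pipe; the shape is an illustrative assumption.
interface BusinessEvent {
  type: string; // e.g. "purchase" or "support-ticket"
  payload: string;
}

type Consumer = (event: BusinessEvent) => void;

// Minimal in-memory stand-in for a managed event stream.
class EventPipe {
  private consumers: Consumer[] = [];
  private log: BusinessEvent[] = [];

  // Producers pour events in without knowing who is listening.
  publish(event: BusinessEvent): void {
    this.log.push(event);
    for (const consumer of this.consumers) {
      consumer(event);
    }
  }

  // Consumers can be added later; they first replay everything already logged.
  subscribe(consumer: Consumer): void {
    for (const past of this.log) {
      consumer(past);
    }
    this.consumers.push(consumer);
  }
}

const pipe = new EventPipe();
pipe.publish({ type: "purchase", payload: "order-1" });
pipe.publish({ type: "support-ticket", payload: "ticket-7" });

// A consumer written after the fact still sees the earlier events.
let purchaseCount = 0;
pipe.subscribe((event) => {
  if (event.type === "purchase") {
    purchaseCount += 1;
  }
});
pipe.publish({ type: "purchase", payload: "order-2" });
```

Because the pipe keeps a log, the purchase counter can be bolted on long after the producers were written, which is the "create consumers later" part of the idea.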

Jumping back on topic, serverless architectures allow us to run with this idea incredibly quickly and with minimal commitment. AWS, for example, already has the infrastructure in place to: create streams via Kinesis, create functions via Lambda, and create rules to execute specific functions based on the events in the stream. This drastically reduces the amount of “glue” code we must write to get the ball rolling. Our functions become succinct poems and are focused on solving business problems.

For the record, you could implement an event-based architecture in a traditional server approach, but scaling and/or accessibility would likely become a problem. I am also willing to bet it would take longer to get started.

“Conclusion”

There are many other benefits to serverless architecture, but they all revolve around cost reduction, faster delivery, and rethinking how we solve problems.

What is Serverless Architecture?

The fundamental theme is to get servers out of the path of software development and focus on building features unique to your product or solution. However, there are several competing cloud providers and a rich marketplace of third-party services, so it can be difficult to navigate when trying to get started. There are also several tiers of serverless offerings, ranging from the DIY to the fully-serviced platforms.

If you are already familiar with this concept and want to know why you should care about it, read more here.

Functions as a Service (FaaS)

In its lowest atomic form, we have Functions as a Service, or FaaS. In this environment, we deploy functions to a provider, AWS Lambda for example, and pay per execution and per compute time (measured in 100ms increments + tiers of RAM). Google has Cloud Functions, Microsoft has Azure Functions, IBM has OpenWhisk, and there are several smaller vendors who focus solely on FaaS offerings. The easiest path to deployment is to write your functions in JavaScript using the Node.js runtime, but you can also use Python/.NET/Java (experience may vary between providers).

On top of manually invoking the function via a CLI (command-line tool), the cloud providers let you trigger your functions via events from their other services: respond to file storage or database events, handle HTTP requests via an API gateway, or handle items as they pass in/out of a queue. This allows the architecture to focus on tackling the interesting problems, and leave the IT/scaling/glue problems to the various cloud providers.

Software-as-a-Service (SaaS)

You are likely already familiar with some Software-as-a-Service offerings; SendGrid and Twilio are commonly used by many applications to handle transactional emails or send/receive SMS. Despite being free, Google Analytics is a great example of SaaS with its web-traffic analysis tools. This list goes on and on: authentication services, error logging, payment gateway, media management, and full-text search engines. Got the idea? Plug into an existing API, pay some money, and check off a task from your backlog. It is also worth pointing out that these products are much more robust and feature-rich than something you will be able to build yourself.

Platform-as-a-Service (PaaS) & Backend-as-a-Service (BaaS)

The definitions start to get blurry between Platform-as-a-Service and Backend-as-a-Service, but in this context, let’s just treat them as one. In this upper tier, you can get a single facade with many FaaS/SaaS offerings baked into the product. This approach tends to be a bit pricier than the first two, but it makes up for it with convenience. The one-stop-shop can be a bit overwhelming at first as you try to figure out the XYZ way but also a bit freeing when many of your application’s core components have already been solved. If you are working on a greenfield project, take a look at PaaS/BaaS, especially if you are making a mobile application.

As with all things in technology, there is no perfect or right answer to which X-as-a-Service direction is better. Personally, I prefer combining FaaS + SaaS to rapidly create my projects, but I am a developer at heart and enjoy designing cost-efficient scalable applications.

How Lightweight, Low-Cost Tools Allow Small Businesses to Create and Market Custom Software

Our Engineering Manager, Kenny, recently wrote an explanatory article on a modern approach to software development that we’ve created that embraces open-source tools and services. This approach minimizes server footprint and licensing costs, spreads up-front costs over the life of the product, and ties expenses to product usage.

Kenny’s article explores the technology that makes all this possible. For this blog, I wanted to reflect on what this approach means for business. I’ll explain how using this approach lowers costs overall and lines up costs with returns, and then walk through a few example use cases to show situations where small business may want to consider going this route.

Lower Overall Costs

Have you ever noticed that phones keep getting better and better, but that they stay about the same price? Innovation in manufacturing processes and increases in productivity mean that we can get more for less. The same is true on the software side of technology. Experts in the field are always finding better ways to do things, and engineers are better than ever at reincorporating these improvements into the software canon as reusable code packages and cloud-based services.

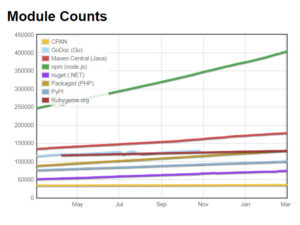

As a small business, you can take advantage of these improvements but only if you are using the right toolset. With a JavaScript codebase and a Node.js backend, our developers have access to npm, a repository of code packages that are available to use in new projects. According to Module Counts, a website that monitors the relative popularity of several package managers, npm is far and away the most popular repository of its kind in terms of number of packages available.

According to www.modulecounts.com, npm is by far the most popular distribution channel for code packages, and its uptake by the community is only increasing.

Aligning Costs with Revenue

In a traditional development model that uses tools like Windows Server, SQL Server, and virtualization software, licenses must be purchased up-front. If you host these servers yourself, you must also purchase the associated hardware. Finally, you must factor in the configuration time that will be spent to spin up these servers.

These outlays take place at the beginning of the project, before any benefits from the software are realized. This leads to a related problem—it’s easy to incorrectly estimate your hardware and software needs, leading to over-investment at the start of the project or an underperforming environment that you must expand at a later date.

A better approach is to align project expenditures with benefits. To help our clients do this, we use cloud-based services instead of on-premises hardware. We rely on tools that scale automatically, cost more when they are used more, and are low cost or free at low usage volumes.

The upshot is that costs are spread over the life of the product instead of being paid up front, and if the product isn’t adopted as quickly as expected, costs stay low. In the best case scenario, the product catches on like wildfire, in which case the services will scale to meet the challenge automatically.

Use Case 1: Outgrowing Excel

If you use Excel to deliver a product to your customers and you are experiencing growth, there will come a day when you will need to move to a more sophisticated tool. Of course, Excel continues to be useful even in large companies for ad hoc data manipulation and reporting. What I’m referring to here is businesses that use Excel to manage key processes or use it to produce an intellectual product that is then sold to customers.

We recently conducted a project for a small consulting firm that used Excel in the past to organize electricity price data for businesses and give recommendations based on that data. They would generate reports and email them to customers. Because the knowledge they were sharing was valuable, they were in high demand. As an avenue for growth, they decided to move from Excel to a more advanced framework to support their core product. They chose to make this move for several reasons.

First and most importantly, it is time consuming to manually copy and paste data into Excel and then manually generate reports to send to customers. These activities are low-value and easily automated, ones that certainly should not be performed by a company’s key employees. Creating a system for importing data automatically and then allowing customers to self-service the reports solved this problem.

Second, Excel does not impose the required rigor for manipulating data as companies grow. In an enterprise-grade application, data storage is separated from the rest of the application, and data is only allowed to be altered through a defined set of methods. This protects the data from inappropriate or inadvertent changes. Excel, on the other hand, allows users to change data directly, which can lead to costly mistakes, especially as more users handle the workbook.
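As a rough illustration of what “a defined set of methods” means in practice, consider the sketch below. `PriceStore` and its fields are hypothetical names loosely based on the electricity price example; a real application would sit on top of a database rather than an in-memory array.

```typescript
// Hypothetical record for the electricity price example.
interface PriceRecord {
  readonly region: string;
  readonly pricePerMwh: number;
}

class PriceStore {
  // The raw data is private: nothing outside this class can edit it directly.
  private records: PriceRecord[] = [];

  // The only way to add data, so every entry passes the same checks.
  addPrice(region: string, pricePerMwh: number): void {
    if (region.trim() === "") {
      throw new Error("region is required");
    }
    if (!isFinite(pricePerMwh) || pricePerMwh < 0) {
      throw new Error("price must be a non-negative number");
    }
    this.records.push({ region: region, pricePerMwh: pricePerMwh });
  }

  // Reports read the data but cannot change it.
  averagePrice(region: string): number | null {
    const matching = this.records.filter((record) => record.region === region);
    if (matching.length === 0) {
      return null;
    }
    let total = 0;
    for (const record of matching) {
      total += record.pricePerMwh;
    }
    return total / matching.length;
  }
}
```

In a workbook, anyone can type over a cell; here, a bad value is rejected at the door, no matter how many people use the system.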

Finally, Excel allows users to tweak data, finagle or even ignore formulas, and perform various circumventions that are difficult to track or understand. These adjustments are made by knowledge workers drawing on years of experience. How do you train a new user on the system if they don’t have years of experience and a well of tacit knowledge to draw from? It’s not possible. This impedes scaling the product and growth of the business. Moving to a system with a well-defined business logic layer forces your knowledge workers to codify all that information tucked away in their brains so that others can take advantage of it.

Use Case 2: Allowing Customers to Self-Service

There are only a handful of methods to increase the value of services provided by a firm, and one of them is to offload work performed by the firm to the customer. Picture those new self-service lines at the supermarket.

In the past, we’ve worked with companies that built internal systems to produce some reports or data that was relevant to their customers. When a company decides it’s time to stop putting in the work to provide data to its customers out of an internal system, and instead opts to have their customers self-service, you’re talking about a Customer Portal.

Although the finished product is complex, a multi-tenant customer portal is mainly constructed from existing services stitched together like patches in a quilt. Users should be able to log in to the system, reset their passwords without your involvement, and access their information (but not other people’s information). Your administrators should be able to manage tenants and users. All this can be accomplished using cloud-based servers and data storage, services for authentication and email, and freely available packages to jumpstart the coding process.
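The “their information but not other people’s” rule is the heart of multi-tenancy, and at its simplest it is a filter applied on every read. The sketch below uses hypothetical `User` and `Report` shapes; in a real portal the tenant would come from the authenticated session and the filter would run in the database query.

```typescript
// Hypothetical shapes for illustration only.
interface Report {
  tenantId: string;
  title: string;
}

interface User {
  name: string;
  tenantId: string;
}

// Every read is scoped to the tenant of the user making the request.
function reportsVisibleTo(user: User, allReports: Report[]): Report[] {
  return allReports.filter((report) => report.tenantId === user.tenantId);
}

const allReports: Report[] = [
  { tenantId: "acme", title: "Acme usage, March" },
  { tenantId: "globex", title: "Globex usage, March" },
  { tenantId: "acme", title: "Acme usage, April" },
];

const acmeUser: User = { name: "Pat", tenantId: "acme" };
const visible = reportsVisibleTo(acmeUser, allReports); // the two Acme reports
```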

As mentioned earlier, it’s getting easier for small businesses to build this kind of portal. This is great for you as a small business, and it’s great for your competitors as well. Should you choose to not put in the effort and money to get something like this done, you can bet one of your competitors will. Would any existing customers switch if they could access up-to-the-minute information any time they wanted instead of waiting on you to send it? Would it make a difference to a new customer trying to decide between you and your competitors?

Use Case 3: Productizing What You Do Well

If you are growing as a business, you can probably point to something that you do better than your competitors. Maybe your model is a little different. Maybe you are unusually consistent in your manufacturing or service delivery. Whatever that difference maker happens to be, you should ask yourself if it’s something that can be productized and sold to others in your industry.

McDonald’s has a market cap north of $100 billion. What does McDonald’s do better than anyone else? Consistency. How they run their stores, order product, train employees, and greet customers is all mapped out in detail and executed consistently. Rather than bogarting their competitive advantage, they’ve chosen to license it out in the form of franchises. While they could have stuck to only corporate stores, and some concepts do, the largest fast food chain in the world got that way by productizing what it does well.

In the software world, first define what your advantage is and then decide if it’s a good candidate for productizing. A business process, a proprietary database, a methodology for identifying good customers, a homegrown ERP system: all of these things are prime targets for turning into software.

Business processes can be converted into workflows, databases can be exposed through reports, customer classification techniques can be codified, and ERP systems can be re-architected to operate at scale. Around this core of value, we build the “plumbing” that makes it a product, including secure multi-tenancy, payment processing, messaging, and administration.

4 Ways Engineering and Construction Firms Use Big Data

HOW SMART, TECH-DRIVEN ENGINEERING & CONSTRUCTION FIRMS ARE USING BIG DATA TO MAKE BETTER DECISIONS AND REDUCE PROJECT COSTS.

“Big Data” is one of those buzzwords you hear a lot in the tech world, but what does it mean for the engineering and construction world? As our business moves into the digital world, we’re amassing huge amounts of data: everything from reports, to GPS data, RFID equipment tagging, digital pictures, digital plans, sensor readings, time logs, depth gauges, etc. This collection of digital assets is “Big Data”.

In the modern digital world, big data is a building material you use to drive your firm’s entire marketing and management strategy. The rubber meets the road when you can analyze big data to recognize trends, associations, and patterns in your business and the marketplace. In the engineering and construction industry, this kind of technology is used to perform different tasks related to data management, pre-construction analysis, etc.

The ability to quickly analyze enormous amounts of data is a business asset that provides valuable insights that may be crucial for your success. Engineering and construction firms often undertake several construction projects at a time, all of which require great attention to detail.

Even the slightest problem with scheduling, research, or measurement could lead to losing a particular project or client. Since each project requires construction companies to collect, organize, and analyze large amounts of data, handling several projects simultaneously requires meticulousness that good data management provides.

What follows are four ways successful firms collect and analyze a vast amount of data in order to stay ahead of the curve and remain competitive.

#1 – Monitoring Sensor Data for Structural Integrity

Let us look at a common example: the construction of an office building. Sensors can be fitted into the structure to provide real-time readings related to temperature and humidity (among other factors). Furthermore, the readings can be transmitted automatically to cloud storage, where you can access them from any internet-enabled device.

As more and more readings and related information accumulate, all of it becomes Big Data, and you can begin to use historical data for things like predictive analytics. All of this makes it much easier to take proactive, prescriptive refurbishment measures when you know similar circumstances result in degradation of building materials over time.

Most successful industrial manufacturing companies are already using sensors for exception monitoring to prevent unexpected structural problems. Imagine if a bridge, building, or road could send you an alert when it needs your immediate attention. The applications and usage scenarios here are endless.
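Exception monitoring can start out very simply: compare each reading against a limit and raise an alert when it is exceeded. The sketch below assumes hypothetical `Reading` and `Limits` shapes and made-up threshold values; real limits would come from your engineers and the materials involved.

```typescript
// Hypothetical shapes; real sensors report many more factors.
interface Reading {
  sensorId: string;
  temperatureC: number;
  humidityPct: number;
}

interface Limits {
  maxTemperatureC: number;
  maxHumidityPct: number;
}

// Returns one alert message for every limit a reading exceeds.
function findExceptions(readings: Reading[], limits: Limits): string[] {
  const alerts: string[] = [];
  for (const reading of readings) {
    if (reading.temperatureC > limits.maxTemperatureC) {
      alerts.push(reading.sensorId + ": temperature " + reading.temperatureC + " C is above the limit");
    }
    if (reading.humidityPct > limits.maxHumidityPct) {
      alerts.push(reading.sensorId + ": humidity " + reading.humidityPct + "% is above the limit");
    }
  }
  return alerts;
}

const alerts = findExceptions(
  [
    { sensorId: "beam-1", temperatureC: 21, humidityPct: 45 },
    { sensorId: "beam-2", temperatureC: 22, humidityPct: 82 },
  ],
  { maxTemperatureC: 40, maxHumidityPct: 70 } // illustrative thresholds only
);
```

Only the readings that break a limit produce output, so people are interrupted when something needs attention and not otherwise.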

#2 – RFID Data for Equipment and Materials Tracking

Construction has never been simple, but today it can be unbelievably complex. There are countless factors to keep track of. This includes all the materials, tools, and equipment being used and their locations around the sites.

Fortunately, modern technology is becoming part of the industry and is evolving to meet engineering and construction needs. With the evolution of RFID technology, it has now become both viable and necessary to include it in your everyday operations.

RFID tracking can be implemented within a reasonable budget, and it will help you not only “keep track of everything” but also acquire useful Big Data from all of the tracking.

#3 – Field Data Collection

This is probably one area where we see too many construction projects stuck in the 20th century. Many are still using paper-based forms and clipboards to record field data.

If you think about it, there is no valid reason to resist upgrading data collection to digital methods. In fact, it is a near impossibility to gather Big Data manually. There are certain types of data that are impractical to collect in paper form.

Converting those paper-based forms of data into digital data is an additional unnecessary cost for your business.

If you’ve been postponing the transition to digital field data collection, there is probably a reason.

Perhaps you think training efforts will be too much work. But rest assured, many of your field workers are already using their smart devices to chat with their spouses, post Facebook and Twitter status updates, and more. If you implement the right workflows, capturing field data with those same devices will be effortless. At Entrance Consulting, we have a lot of experience with helping construction and engineering companies go digital. Feel free to contact us and see how we can help you make the transition.

#4 – Project & Safety Risk Mitigation

Predicting risks is something any serious construction and engineering company needs to consider to be profitable. One of the best ways to mitigate risks is through effective analysis of Big Data.

Big Data analysis provides insights that help you reduce costs, improve efficiency, identify potential problems, and devise ways to address them.

Using Big Data analysis to predict risks improves the understanding of several aspects of construction work, improving the decision-making process in the end.

Our software expertise

Over the years, we have built a team that designs and develops modern applications for engineering and construction companies. We also help you customize and implement any of the major software solutions you’ve heard about. If you need any help with software, custom or otherwise, we’re here to make it happen.

We specialize in software development, so you know what you’re in for when you choose to collaborate with us. In the past, we have worked on and solved complex engineering issues, including:

- optical throughput losses calculation

- analysis of pipeline failure

- geophysical data management

We can automate the process of finding solutions to any problems you might face. In other words, our software will enable more effective decision making within your company and will also save money in the long run.

The power and ability of Big Data to transform any business is undeniable. Fortunately, purchasing expensive software or technology is no longer a necessity, since there are professionals like the ones at Entrance Consulting. We can take care of the entire process of complex data analytics and management, as well as handle business intelligence for you.

Taking the SharePoint Framework for a Test Drive, Part 1

The developer preview for SharePoint Framework was announced late last week, so I decided to work through the ramp-up tutorials and offer my initial impressions. I hope this will help you avoid a couple of stumbling blocks that I encountered and get you thinking about this new (and imo, very cool) way of approaching SharePoint customization.

If you’re not familiar with what the SharePoint Framework is, check out this article.

I followed the directions at https://github.com/SharePoint/sp-dev-docs/wiki/HelloWorld-WebPart to get started. I had two takeaways right off the bat. The first is that the Yeoman template is super helpful for getting spun up quickly, especially since your build process comes fully assembled. The second is that the starter project is HEAVY. The project folder (node modules included) is 244MB.

While it’s inconvenient to wait 5 minutes while Yeoman spins up your project template, it’s unlikely to be a problem, as a lot of the node modules will just be used to compile and build our application. We’ll withhold judgement on the second point until we see how big the actual built bundles come out.

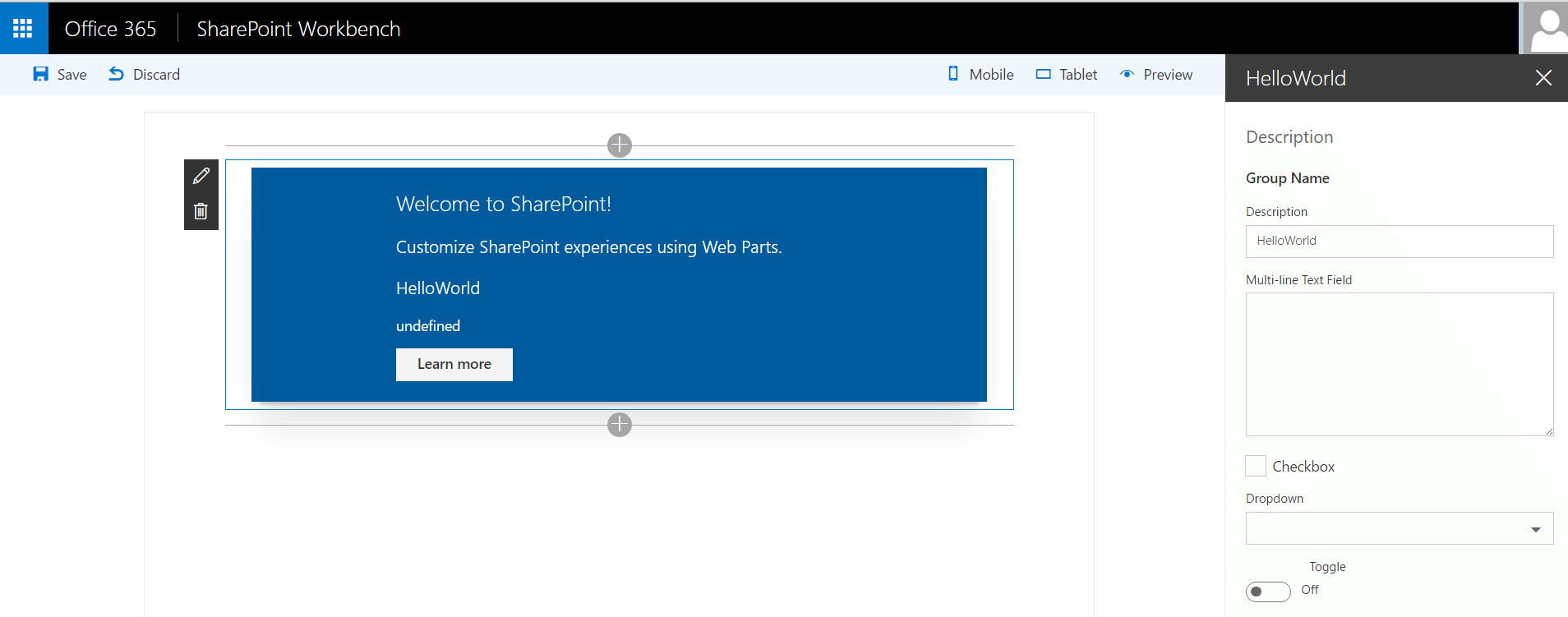

I entered ‘gulp serve’. The project built locally as promised, and I was able to load my web part locally. My first impressions are that the layout looks very modern. If this is what’s in store with the new page experience, we’re on the right track. As I made changes, they were automatically reflected in the page, a very handy inclusion in the build chain. The ability to easily change the items included in the web part properties panel will be really useful for making web parts portable. For instance, it was not easy to specify a source library in an app in the past. This makes it much easier.

The workbench also gives you the option to see how your web part would look on mobile or tablet, which is good because a big selling point of the new client side web parts is their responsiveness and ability to play well within the SharePoint app.

Looking back at the size of the dist folder puts my mind at ease; it’s less than half of a MB.

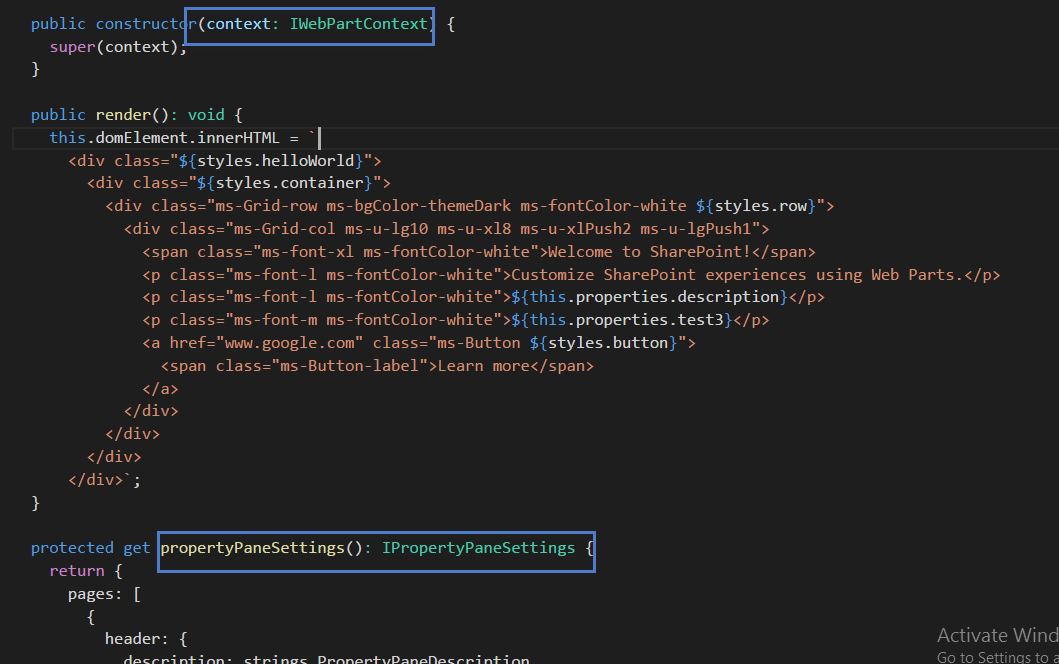

Looking through the code structure, it’s clear that a proficiency with TypeScript will be helpful when developing these web parts, at least if you’re using the Yeoman template. Using interfaces in JavaScript is something I am not accustomed to… time to hit the books!

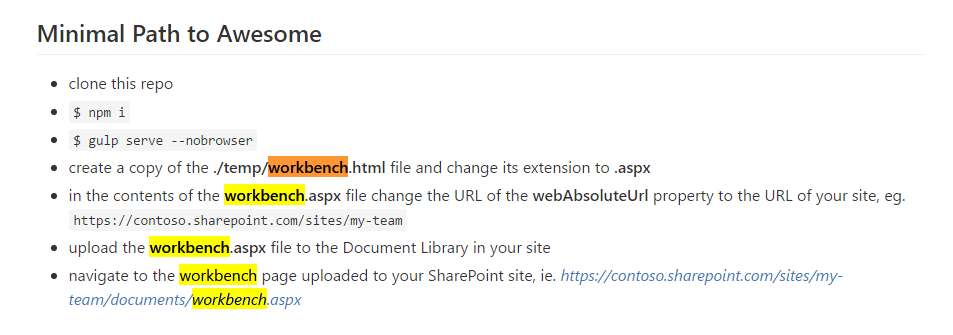

Unfortunately, the instructions for viewing your web part live in SharePoint are lacking. The full steps are outlined here, and require you to change the file extension on workbench.html, change a bit of code within it, and upload it to your dev site.

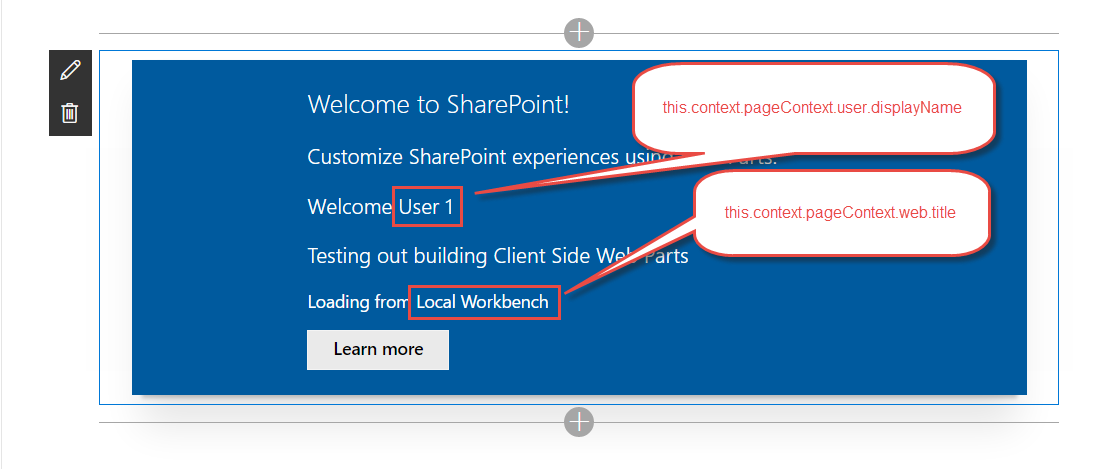

It was a straightforward task to access page context information within the web part class since assignment of those variables is handled automatically in the base class constructor. All you need to do is call ‘this.context.pageContext.user.displayName’, for instance, to retrieve the user’s name. That seems like a lot of typing, but the IntelliSense in Visual Studio Code is reliable when using TypeScript, and we don’t have to worry about the page context object not existing or certain SharePoint libraries not being loaded. No more ‘SP.SOD.executeOrDelayUntilScriptLoaded’, hooray!
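For readers who have not opened the template yet, here is a stripped-down sketch of that pattern. The interfaces and class names are my own stand-ins so the snippet runs outside SharePoint; they are not the framework’s real types, and in the real framework you do not pass the context in yourself.

```typescript
// Local stand-ins for the framework types (assumptions, not the real definitions).
interface PageContextLike {
  user: { displayName: string };
}

interface WebPartContextLike {
  pageContext: PageContextLike;
}

// Plays the role of the framework base class, which assigns the context up front.
class BaseWebPartSketch {
  protected context: WebPartContextLike;

  constructor(context: WebPartContextLike) {
    this.context = context;
  }
}

class HelloWorldSketch extends BaseWebPartSketch {
  // The context is already populated by the time our own code runs.
  greeting(): string {
    return "Hello, " + this.context.pageContext.user.displayName;
  }
}

const webPart = new HelloWorldSketch({
  pageContext: { user: { displayName: "Test User" } },
});
const message = webPart.greeting(); // "Hello, Test User"
```

Because the context is typed, the editor can autocomplete the whole `pageContext.user.displayName` chain, which takes most of the sting out of the long property path.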

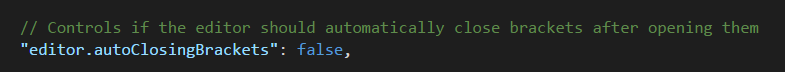

One thing that was a little befuddling as I worked through the second section of the tutorial was that my closing braces were not automatically being generated like they usually would be. Taking a quick look at the settings.json file on the project uncovered the culprit. Apparently this is a setting that comes with the project. I certainly didn’t make this change.

Maybe there’s a good reason why this should be set to false, but it seems really odd that a project template would ship with settings that are more personal preference than anything else.

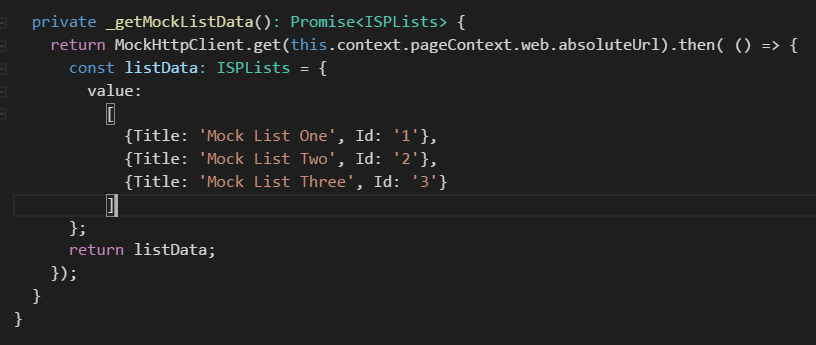

The next section of the tutorial has us creating a mock http request class, since we cannot access actual SharePoint data when working with the workbench. I get the sense that this will be a normal part of development using the SharePoint Framework. Code your web part offline, stubbing out the data retrieval, and then wire it up at the end.

As someone who is not accustomed to writing TypeScript and who has not used the Promise type in ES6, the method of data retrieval on display is a little intimidating. I would recommend developers look into these subjects as they get ramped up on the Framework.
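If Promises are new to you as well, the gist is easier to see in a small sketch than in the full tutorial code. The names below (`ListInfo`, `MockListClient`, `listTitles`) are my own for illustration and are not copied from the tutorial; the important part is that the mock returns a Promise, so the calling code looks the same as it would for a real HTTP request.

```typescript
// Minimal shape of a list for this sketch (an assumption, not the full SharePoint type).
interface ListInfo {
  Title: string;
  Id: string;
}

class MockListClient {
  private static items: ListInfo[] = [
    { Title: "Mock List", Id: "1" },
    { Title: "Mock List 2", Id: "2" },
  ];

  // Returns a Promise so callers follow the same path as a real request would.
  static get(): Promise<ListInfo[]> {
    return Promise.resolve(MockListClient.items.slice());
  }
}

// Works with any source of lists, mocked or live, as long as it returns a Promise.
function listTitles(source: () => Promise<ListInfo[]>): Promise<string[]> {
  return source().then((lists) => lists.map((list) => list.Title));
}

const titlesPromise = listTitles(() => MockListClient.get());
```

Calling `titlesPromise.then(...)` hands you the titles once the (pretend) request completes, and swapping in a live data source later should not require changing `listTitles` at all.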

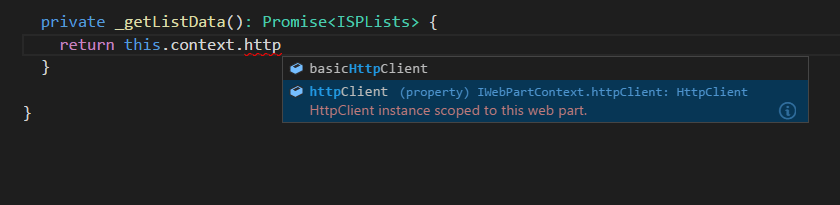

Next, we put in a method to get all the lists from the actual SharePoint site (in other words, live data). Per the tutorial, this method makes use of a call to the REST API. This is interesting, because new developers learning the framework will retrieve data from SharePoint for the first time not by using JSOM or CSOM, but through REST. As someone who uses the REST API in SharePoint almost exclusively for data retrieval due to the transparency and control it grants, I like this a lot.

Another cool feature I’m seeing is that there is an httpClient property on the context object associated with the web part. The description of the property is that it’s an instance of the HttpClient class scoped to the current web part. That is too cool. It (hopefully) eliminates the need to include jQuery just to do AJAX requests, and it (hopefully) allows for tons of reuse between your web parts when it comes to querying SharePoint.

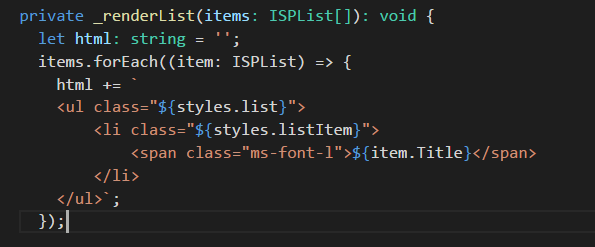
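As a rough sketch of what retrieving lists over REST can look like, the code below calls the `/_api/web/lists` endpoint through any fetch-like function. It deliberately does not use the framework's HttpClient, whose exact method signatures are not shown in this post. The `Fetcher` type, the `getLists` and `listsEndpoint` names, and the `Hidden eq false` filter are assumptions for illustration.

```typescript
// Minimal shape of a list as used in this sketch.
interface SpList {
  Title: string;
  Id: string;
}

// The parts of a response this sketch relies on.
interface JsonResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

// Any fetch-like function; the browser's fetch fits this signature.
type Fetcher = (
  url: string,
  init: { headers: Record<string, string> }
) => Promise<JsonResponse>;

// Builds the REST URL for the non-hidden lists in a site.
function listsEndpoint(webUrl: string): string {
  const base = webUrl.replace(/\/+$/, ""); // drop trailing slashes
  return base + "/_api/web/lists?$filter=Hidden eq false";
}

// Requests the lists and unwraps the "value" array that SharePoint
// returns when asked for odata=nometadata JSON.
function getLists(webUrl: string, fetcher: Fetcher): Promise<SpList[]> {
  return fetcher(listsEndpoint(webUrl), {
    headers: { Accept: "application/json;odata=nometadata" },
  })
    .then((response) => {
      if (!response.ok) {
        throw new Error("Request failed with status " + response.status);
      }
      return response.json();
    })
    .then((body) => (body as { value: SpList[] }).value);
}
```

Passing the fetcher in as a parameter is a design choice for the sketch: it keeps the function testable offline and makes it easy to substitute whatever HTTP client the web part context provides.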

The remainder of the tutorial has us rendering the list data we retrieved to the screen at the bottom of the web part. I had two takeaways from this section.

First, it was neat that there is an environment variable that lets you easily differentiate between offline and online work. By using the statement `import { EnvironmentType } from '@microsoft/sp-client-base'`, you gain access to a variable at this.context.environment.type within your web part class. This allows you to do one form of data retrieval when you are in the workbench and another when you are live in SharePoint. The way I see using this is as I mentioned earlier: make a first pass offline with stubbed-out data, and then implement the data retrieval one method at a time. By using the environment type, you can switch back and forth between online and offline during this process and everything will continue to work.
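The switch described above can be sketched as follows. To keep the example self-contained, it declares a local stand-in for the framework's `EnvironmentType` enum rather than importing the real one. The stand-in's member values and the `chooseDataSource` helper are assumptions, not part of the framework.

```typescript
// Local stand-in for the framework's EnvironmentType enum so this
// sketch compiles on its own; in a web part the real enum is imported.
enum EnvironmentType {
  Local,
  SharePoint,
}

type DataSource = "mock" | "rest";

// Picks the data source for the current environment: mock data in the
// local workbench, the REST API when hosted in SharePoint.
function chooseDataSource(environmentType: EnvironmentType): DataSource {
  return environmentType === EnvironmentType.Local ? "mock" : "rest";
}

console.log(chooseDataSource(EnvironmentType.Local)); // "mock"
console.log(chooseDataSource(EnvironmentType.SharePoint)); // "rest"
```

In a real web part, the argument would come from this.context.environment.type, and each branch would call the matching retrieval method.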

Second, check out how we’re getting our list info to render:

Yeah… I will be checking out the React project template that they have very soon.

That takes us to the end of the second section of the SharePoint Framework tutorial. I’ll continue on and share takeaways from the next section(s) in an upcoming post.

5 Reasons to Implement an Analytics Platform for Your Engineering & Construction Firm

Engineering and construction (E&C) projects require a lot of resources and generate massive amounts of data, but historically the E&C industry has lagged behind other industries when it comes to embracing technology. Companies going against the grain to take advantage of big data and analytics are seeing significant results and gaining a competitive advantage over their rivals. Here are five reasons the engineering & construction industry should embrace an analytics platform:

- Reduced rework, waste and project delivery times. According to an article by Forbes, material waste and remedial work make up 35% of costs for construction companies[1]. Without modern software technology to augment and streamline designs, revisions, and reporting, minor changes could take weeks or even months of back-and-forth communication with engineers, architects, and others involved. Technology can decrease design and revision times tremendously and ensure that your builders always have the latest version of the plans to work from, which can also mean less rework and material waste. JE Dunn’s data-driven system helped them save $11 million on a $60 million civic center construction project and shortened completion time by 12 weeks[1].

- Information at your fingertips. It’s common for data to get stuck in a bottleneck with a specific person or department, tucked away on someone’s computer hard drive, or lost in a sea of emails. Accessing that data means waiting on other people to provide it, and often the data is already outdated by the time a report is put together. Tools such as SharePoint can minimize this problem, and deploying your documents to an accessible host via a SharePoint migration can help increase transparency. With systems now moving to the cloud, that data can be accessed by anyone who needs it, whenever they need it.

- Visualization. Many companies still use spreadsheets to share company information, but how much information do you really get from looking at a row of numbers that is a mile long? As more and more people experience data through visualization tools such as Spotfire and Tableau, spreadsheets seem increasingly slow and cumbersome in comparison. Data visualization tools allow you to blend multiple data sources in real time and identify visual trends and patterns immediately. Many of these tools also allow you to “drill around” information, so you can view data by project, region, manager, or any combination of specific fields you might need to identify the cause of a problem and get real-time answers to any questions that arise.

- Mitigate Risk. Being able to quickly gather and view past data allows companies to evaluate and prevent risk in the future as well as identify opportunities for improvement.

- Competitive Advantage. Analytics can be used in a variety of ways to improve efficiency, reduce costs, create better estimations and provide project transparency. Research by both Forbes and Ernst & Young revealed that “top performing organizations saw tangible business benefits from data and analytics, giving them a competitive advantage over rivals.”[2]

These are just a few of the ways big data and analytics can benefit your organization. If you are interested in learning more about how big data and analytics make it easier (and cheaper) to identify and manage project costs before they become a major problem, check out our Cost Control Analytics eBook!

If you have any questions or would like to discuss some different ways technology can help give your company a competitive advantage, schedule a quick call: Click here to book a brief call on my calendar!

[1] http://www.forbes.com/sites/bernardmarr/2016/04/19/how-big-data-and-analytics-are-transforming-the-construction-industry/#67dbea3a5cd0

[2] https://betterworkingworld.ey.com/better-questions/transform-analytics-driven-organization

5 Secrets to Client Happiness

There’s something exciting about a new business opportunity or new project, especially if you are in a client facing work environment. The possibility of adding value through products and services and/or business relationships is exhilarating. However, maintaining client happiness and building a lasting working relationship can become a challenge when the project hits snags, or there are staffing changes.

As a business analyst, I often find myself concerned about maintaining client happiness in addition to delivering a quality work product, and I’ve learned a few things along the way. Here are my top five secrets for keeping my clients happy:

- Understand your client’s expectations: You cannot hit an invisible target, right? Well, the same applies to your client’s expectations. Make sure you have a clear understanding of what they expect from you before starting the project. This helps you determine if you are meeting their needs. Oftentimes we are held accountable for things we did not know we were expected to do. Whether you are in software development or retail services, there is value in knowing your client’s expectations. You will never meet those expectations if you do not know what they are. Also, do not be afraid to ask. For example: What are you expecting? How often do you need this? Should we change this approach?

- Be Responsive: Being too busy to respond to a client is simply unacceptable. Who’s not busy? Find a way to let the client know you hear them and you will get back to them with a solution or response as soon as possible. Some people will go quiet until they have everything worked out. Guess what: that is not good enough, and is often the absolute worst way to handle a tricky situation. Even if you have completely solved the problem, the client does not know this and is more likely to say Thanks but No Thanks because they thought you abandoned them in the interim. Get in front of the issue and let your client know that you are on the case.

- Be Honest About Hard Truths: If you are asked to provide something that is not possible, do not be afraid to tell your client it’s impossible or risky. Give them alternatives or spell out the repercussions. For example: if we do this <impossible thing>, then this <unwanted thing> will happen. Most clients will appreciate the honesty, even if the answer isn’t what they wanted to hear.

- Client Appreciation: Everybody on the planet likes to be appreciated once in a while. Landing the deal is no reason to stop showing appreciation to the client. There is nothing wrong with an occasional lunch or dessert surprise. Clients love to receive lagniappe (free stuff). Sometimes it’s the little things. They may not remember when you worked all night or over the weekend to meet a deadline, but they will remember the time you brought donuts or cookies to tell them how much you appreciate their business.

- Be Nice. Always: I was always taught to kill ’em with kindness. As a child, I did not understand that statement, but as an adult, I get it! This doesn’t mean that you should let your client have anything and everything they want, but when your client asks for things that are out of scope or simply misunderstands the process, be nice when you redirect them. Nobody likes a jerk, even when they need to be set straight.

Remember: get an understanding of what your client is expecting, because expectations sometimes change over time. Be responsive; the excuse “I was too busy” will not cut it. Be honest; most people will appreciate and respect you for it! Don’t forget to show your client that you appreciate them. And don’t be difficult.

In addition to providing a quality work product, these are all the key secrets to having a good professional relationship with your clients. I hope you’ve found this helpful. If you have any questions, feel free to send me a note!

New Level of Detail Expressions in Tableau

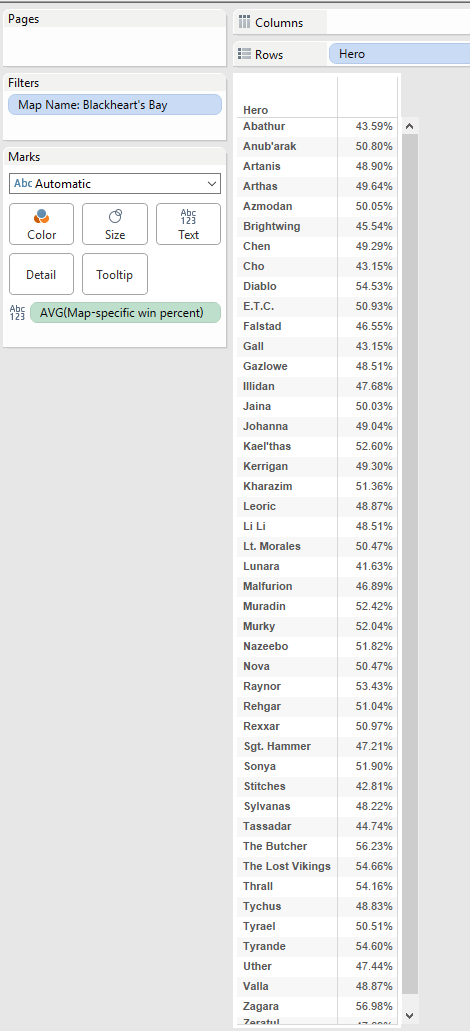

Level of Detail (LOD) expressions let users specify the dimensions used in a calculation without having to include them in the visualization. To showcase the new level of detail expressions in Tableau, we’ll be looking at a dataset containing records about a PC game called Heroes of the Storm.

Each game has a total of 10 players divided into 2 even teams. There can only be one winning team and one losing team. Each team consists of 5 unique characters (aka heroes) controlled by 5 unique players. Each of these players has a rating called MMR that the game uses to measure the players’ skill. The heroes in the game fit into 1 of 4 categories: Tank, Assassin, Specialist, or Support. Each game can take place on 1 of 10 different maps. With the background out of the way, let’s take a look at how these new expressions can help us.

There are three new level of detail expressions in Tableau: FIXED, INCLUDE, and EXCLUDE.

Today, we’ll learn about FIXED. This expression is used most often when you’re calculating a value that uses data outside of the view level of detail. This means that your view is only returning results for a subset of your data, but you’d like that subset to be compared to more than what is shown in the view.

For the first example, we’ll be trying to answer the question, what is each hero’s win rate on a specific map vs their average on all maps? In the context of retailers, this could read as, “What is my profit on a product in a specific state vs the product’s profit in all 50 states?”

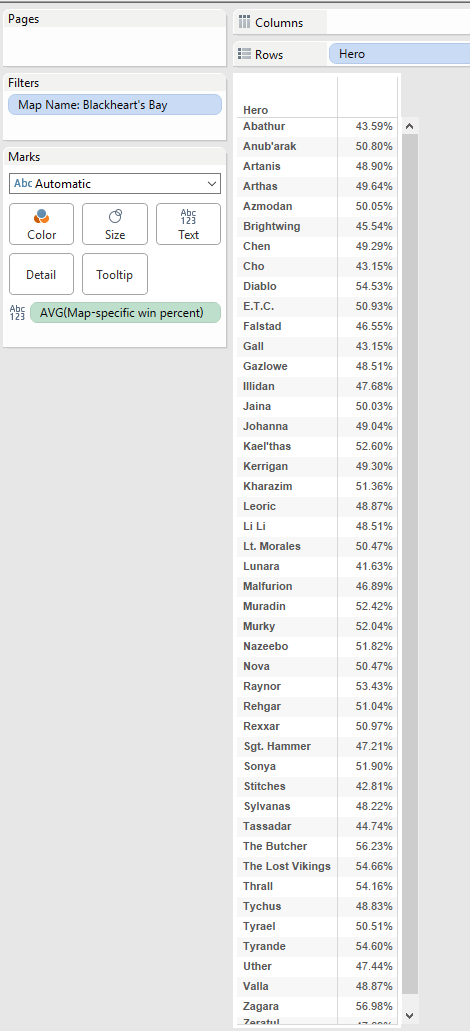

You’ll see in this example that we have created a visualization that shows every hero’s average win rate, subject to the map filter. However, we also want each hero’s overall win rate, which is map agnostic.

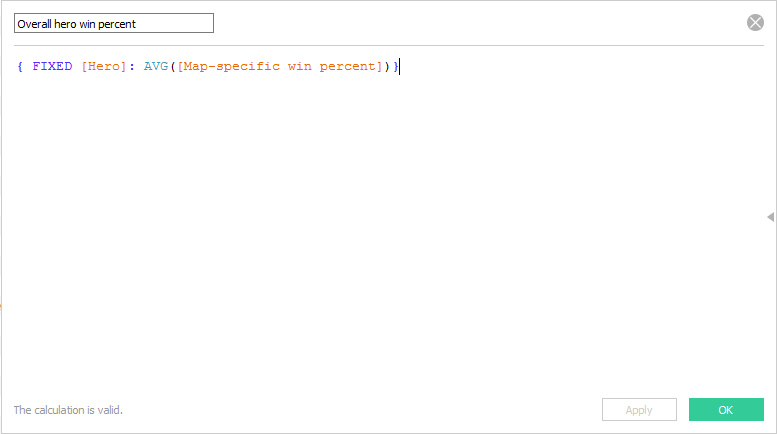

In order to accomplish this, we’ll use the FIXED formula. The FIXED formula looks like this:

{FIXED [<dim>] : <aggregate expression>}

Whichever dimension we fix will be the only dimension taken into account when calculating our measures. In our example, we only care about each hero. We don’t care which map the game was played on, we don’t care about the hero’s class, or any other dimension.

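In our case, we’ll use {FIXED [Hero]: AVG([IsWinner])}. If you’re familiar with SQL, this behaves like a subquery grouped by hero (GROUP BY [Hero]): for any one hero, the value is the average over every row WHERE [Hero] = '<Hero Name>', regardless of the other dimensions in the view.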

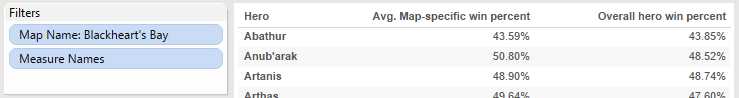

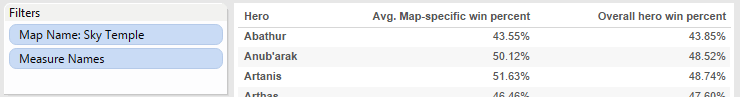

Now, we can adjust the map filter and see each hero’s average win rate per map compared to their overall win percentage. Notice in the example above that the overall win percentage doesn’t change, while the per-map percentage does change as we adjust our map filter.

If we wanted to take this a step further, we could create a calculated field that subtracts each hero’s overall win rate from their map-specific win rate. A positive result would reflect that they were, on average, better on a specific map, and a negative result would reflect the opposite.
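To make the FIXED behavior concrete outside of Tableau, here is a small sketch that reproduces the same arithmetic on a handful of made-up rows. The hero names, map names, and results are invented for illustration and are not from the hotslogs data. The `winRateByHero` helper is hypothetical.

```typescript
interface GameRow {
  hero: string;
  map: string;
  isWinner: number; // 1 for a win, 0 for a loss
}

// Average of isWinner per hero over whichever rows are passed in.
function winRateByHero(rows: GameRow[]): Record<string, number> {
  const totals: Record<string, { wins: number; games: number }> = {};
  for (const row of rows) {
    const entry = totals[row.hero] || (totals[row.hero] = { wins: 0, games: 0 });
    entry.wins += row.isWinner;
    entry.games += 1;
  }
  const rates: Record<string, number> = {};
  for (const hero of Object.keys(totals)) {
    rates[hero] = totals[hero].wins / totals[hero].games;
  }
  return rates;
}

// Invented sample data.
const rows: GameRow[] = [
  { hero: "Hero A", map: "Map 1", isWinner: 1 },
  { hero: "Hero A", map: "Map 1", isWinner: 1 },
  { hero: "Hero A", map: "Map 2", isWinner: 0 },
  { hero: "Hero A", map: "Map 2", isWinner: 0 },
  { hero: "Hero B", map: "Map 1", isWinner: 0 },
  { hero: "Hero B", map: "Map 2", isWinner: 1 },
];

// Like {FIXED [Hero]: AVG([IsWinner])}: computed from every row,
// before the view's map filter is applied.
const overall = winRateByHero(rows);

// Like AVG([IsWinner]) in the view: computed after the map filter.
const onMap1 = winRateByHero(rows.filter((row) => row.map === "Map 1"));

// Map-specific minus overall: positive means better than usual here.
for (const hero of Object.keys(onMap1)) {
  console.log(hero, onMap1[hero] - overall[hero]);
}
```

With this sample data, Hero A wins both games on Map 1 but only half of all games, so the difference is +0.5; Hero B loses its only Map 1 game against an overall rate of 0.5, so the difference is -0.5. Changing the filter to "Map 2" changes `onMap1` but leaves `overall` untouched, which mirrors what the map filter does in the view.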

Final thoughts on FIXED:

- Your FIXED calculation can have multiple dimensions in it

- Using FIXED ignores view-level filters, but does not ignore context filters, data source filters, or extract filters

- A FIXED expression can produce a measure or a dimension, depending on the underlying field in the aggregate expression

- Using FIXED simplifies the process when comparing measures in your view to aggregate measures in your data source

Credit to Ben Barrett and the rest of the team from www.hotslogs.com for making the Heroes of the Storm data publicly available.

To learn more about level of detail expressions in Tableau, and to see some practical business applications, check out their blog post.

Improving SharePoint User Adoption Email Course

We’ve launched a 7-day email course about improving SharePoint user adoption that teaches you how to build a SharePoint intranet site that people actually want to use.

Earlier this summer, my colleague Ben Smith and I gave a webinar presentation on increasing SharePoint user adoption to improve the return on your SharePoint investment. Not everyone has time for a webinar, but you might have time for one lesson a day, delivered by email. If you’re ready to learn how to build a SharePoint site that people actually want to use, Entrance is now offering a free 7-day email course based on Ben’s course material on increasing SharePoint user adoption.

The course covers 7 key areas:

- Understanding the Needs of Your Users

- SharePoint Brand Standards

- Communication

- Identifying Departmental SharePoint Champions

- Training Users to Encourage User Adoption

- Measuring Engagement

- Maintenance & Governance