Agile Methodology: User Stories

In the past few weeks we’ve been discussing how Agile methodology lets us iteratively develop custom software that better meets the needs of our customers. Probably more important than this concept, however, is defining the business need before we ever begin a project. The way we accomplish this is by creating user stories.

RFID Technology and Oil & Gas: Streamline with Custom Software

Oil and Gas RFID Software

We are all pretty familiar with RFID technology, since most big-ticket items we see at the store are protected by RFID tags. In the oil and gas industry, adoption has been much slower, which is surprising when you think about how much more the industry stands to lose. If a pipe goes missing, or worse, doesn’t get needed repairs because nobody could find it, the losses can run from thousands to millions of dollars.

How to Prepare Your Business for a Software Transition

Keeping up with disruptive technology is essential for business success in today’s evolving marketplace. Learn why a software transition is necessary and how to implement one seamlessly.

Chatbots as an Alternative to Traditional Apps

Not every application needs a GUI. Imagine an app which the user doesn’t have to install, where the user interface has already been done for you, and where you just have to focus on the logic. That’s a chatbot application. If the user basically needs answers to questions, a chatbot may be the best solution.

How Chatbots Work

A lot of people regularly use conversational platforms. They use messaging apps like WhatsApp, Facebook Messenger, and Apple Messages for personal conversations, and Slack, Microsoft Teams, and similar environments for business. Almost everyone is familiar with SMS. As a result, engaging with a chatbot is a familiar experience for many people.

Providing a chatbot where the users already are is a great way to engage them. There’s no need to get them over the psychological hump of downloading and installing an app. Because the user is already logged in to the platform, developers are spared the trouble of writing login and authentication code.

Chatbots vary hugely in power and complexity. The simpler kinds don’t take much programming effort. The more sophisticated ones come close to engaging users in a real conversation. There are three main types of chatbots to consider.

Menu-Based Bots

This type presents a list of choices for the user to pick from. It’s similar to what you’ll encounter in automated phone answering systems. It might start by asking you if you want to (a) make a purchase, (b) inquire about an existing purchase, or (c) check your account status. It can’t actually converse with the user, but it can accept selections and inputs. If it can’t handle the user’s request, it will give a website or a phone number for assistance.
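To make the menu-based flow concrete, here is a minimal, platform-agnostic sketch in Python. The menu options, the fallback URL, and the phone number are hypothetical; a real bot would receive replies through its platform’s API rather than as plain function arguments.

```python
# Minimal menu-based bot sketch: it accepts only a fixed set of choices
# and falls back to a help contact for anything else.
# The menu options and the fallback URL/phone number are hypothetical.

MENU = {
    "a": "Make a purchase",
    "b": "Inquire about an existing purchase",
    "c": "Check your account status",
}

FALLBACK = "Sorry, I can't help with that. Visit https://example.com/help or call 555-0100."


def menu_prompt() -> str:
    """Build the text shown to the user at the start of a conversation."""
    lines = ["What would you like to do?"]
    for key, label in MENU.items():
        lines.append(f"({key}) {label}")
    return "\n".join(lines)


def handle_selection(reply: str) -> str:
    """Map the user's reply to a menu action, or fall back to a help contact."""
    choice = reply.strip().lower().strip("()")  # accept "b", "B", or "(b)"
    if choice in MENU:
        return f"You chose: {MENU[choice]}"
    return FALLBACK


if __name__ == "__main__":
    print(menu_prompt())
    print(handle_selection("(b)"))
    print(handle_selection("tell me a joke"))
```

Because the bot only recognizes the listed keys, anything unexpected goes straight to the fallback message, which mirrors how a phone menu hands off to a human.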

Keyword Recognition Bots

Bots that rely on keywords can’t really understand what the user is asking, but they can fake it pretty well by recognizing keywords in the question. Words like “account,” “received,” and “broken” let the bot guess the general area of the query. They ask the user questions like “Do you want to check your shipment status?” to make sure they’ve understood correctly.
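A keyword bot can be sketched in a few lines. This is an illustration under assumptions, not a production design: the keyword table, topic names, and confirmation prompts are hypothetical, and ties between topics are simply broken by table order.

```python
# Keyword-recognition bot sketch: no language understanding, just keyword
# matching to guess a topic, followed by a confirming question.
# The keyword table and topic names are hypothetical examples.

import re
from typing import Optional

KEYWORDS = {
    "shipment": {"received", "shipped", "delivery", "tracking"},
    "account": {"account", "password", "login", "balance"},
    "returns": {"broken", "damaged", "refund", "return"},
}

CONFIRM = {
    "shipment": "Do you want to check your shipment status?",
    "account": "Do you want help with your account?",
    "returns": "Do you want to return or replace an item?",
}


def guess_topic(message: str) -> Optional[str]:
    """Return the topic whose keywords appear most often, or None if none match."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    scores = {topic: len(words & kws) for topic, kws in KEYWORDS.items()}
    best = max(scores, key=scores.get)  # ties go to the first topic listed
    return best if scores[best] > 0 else None


def respond(message: str) -> str:
    """Ask a confirming question for the guessed topic, or ask to rephrase."""
    topic = guess_topic(message)
    if topic is None:
        return "Sorry, I didn't catch that. Could you rephrase?"
    return CONFIRM[topic]
```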

Bots That Parse Queries and Use Context

These are the hardest bots for developers to implement, but they can really impress the user. They use artificial intelligence to understand most relevant questions. Machine learning lets them build up a context of what the user is talking about. The user can say “it” instead of repeating the name of an item every time. At their best, they’re almost like talking with a service representative with a perfect memory.
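Real context-aware bots rely on natural-language processing and machine-learning models, which are well beyond a short example. The sketch below isolates just the “context” idea with a simple rule-based stand-in (not machine learning): the bot remembers the last product mentioned, so a later “it” still refers to that product. The catalog and the pronoun rule are hypothetical.

```python
# Illustrates only conversational context, using a rule-based stand-in
# rather than ML: the bot remembers the last catalog item mentioned so a
# later "it" resolves to that item. Catalog and rules are hypothetical.

import re
from typing import Optional

CATALOG = {"blender", "toaster", "kettle"}


class ContextBot:
    def __init__(self) -> None:
        self.last_item: Optional[str] = None  # memory carried across turns

    def resolve_item(self, message: str) -> Optional[str]:
        words = re.findall(r"[a-z]+", message.lower())
        for word in words:
            if word in CATALOG:
                self.last_item = word  # remember for later turns
                return word
        if "it" in words:
            return self.last_item  # "it" refers to the remembered item
        return None

    def respond(self, message: str) -> str:
        item = self.resolve_item(message)
        if item is None:
            return "Which product do you mean?"
        text = message.lower()
        if "price" in text or "cost" in text:
            return f"Looking up the price of the {item}."
        return f"What would you like to know about the {item}?"
```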

Where Chatbots Are Found

Any conversational platform can support chatbots in principle. Even plain SMS messaging can do the job. Some are better suited than others, offering features beyond text. It’s important to check each platform’s terms of service to make sure bots are allowed and that your bot meets their requirements.

Slack encourages bots. A “bot,” in its terminology, is an app for conversational interaction. Once a bot is installed in a workspace, users can invoke it by name. Many bots are available for customization. Alternatively, developers can create their own.

Recently, a customer of ours wanted an app that would let their customers upload a list of inventory SKUs and get back metadata about them. The metadata had to be formatted in a way online retailers could use. We found the best way to do it was to build a Slack bot. The user interface requirements were simple and text-based.

Invoking the bot gives a short explanation of how to use it. After uploading an inventory list, the bot keeps the user informed of the processing status. When it’s done, it returns a spreadsheet suitable for giving to a customer. The environment is familiar to Slack users, and using it is straightforward.
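The post doesn’t describe how the bot was implemented, so the following is only a sketch of how its core logic might look, kept separate from the Slack plumbing that receives uploads and posts replies. The `CATALOG` dictionary, the output columns, and the status messages are all hypothetical; `notify` stands in for whatever call posts a message back to the user.

```python
# Core of an inventory-metadata bot, independent of the chat platform.
# Slack wiring (receiving the upload, posting replies) is omitted; the
# catalog, column names, and status messages are hypothetical.

import csv
import io
from typing import Callable, Dict, List

# Stand-in for whatever data source actually supplies SKU metadata.
CATALOG: Dict[str, Dict[str, str]] = {
    "SKU-1001": {"title": "Steel pipe, 2 in", "weight_lb": "12.5"},
    "SKU-1002": {"title": "Gate valve, 2 in", "weight_lb": "8.0"},
}


def process_inventory(upload: str, notify: Callable[[str], None]) -> str:
    """Read one SKU per line, look up metadata, and return a CSV report.

    `notify` posts status updates back to the user (e.g. a chat message).
    """
    skus: List[str] = [line.strip() for line in upload.splitlines() if line.strip()]
    notify(f"Received {len(skus)} SKUs. Processing...")

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["sku", "title", "weight_lb", "status"])
    writer.writeheader()
    for i, sku in enumerate(skus, start=1):
        meta = CATALOG.get(sku)
        if meta is None:
            writer.writerow({"sku": sku, "title": "", "weight_lb": "", "status": "not found"})
        else:
            writer.writerow({"sku": sku, **meta, "status": "ok"})
        if i % 100 == 0:  # periodic progress report for long lists
            notify(f"Processed {i} of {len(skus)} SKUs...")
    notify("Done. Your spreadsheet is ready.")
    return out.getvalue()
```

Keeping this logic free of Slack-specific calls means the same function could sit behind a different chat platform, with only the upload and messaging code changing.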

How to Start Your Chatbot

Microsoft Teams has similar support for bots. A bot can converse with an individual, a selected group, or a whole team. Developers use the Bot Framework to create a bot that’s as simple or complex as required. Webhooks can also connect Teams to external web services, extending what a bot can do.
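Outgoing webhooks pass messages from Teams to a web service, so the service should check that each request really came from Teams. The sketch below assumes the scheme Microsoft documents for Teams outgoing webhooks: an HMAC-SHA256 signature of the raw request body, keyed with the base64-decoded security token issued when the webhook is created, and sent as `Authorization: HMAC <signature>`. Check the current Teams documentation before relying on these details.

```python
# Verifying a Microsoft Teams outgoing-webhook request before acting on it.
# Assumption: Teams signs the raw body with HMAC-SHA256, keyed by the
# base64-decoded security token, and sends "Authorization: HMAC <base64>".

import base64
import hashlib
import hmac


def expected_auth_header(body: bytes, security_token: str) -> str:
    """Compute the Authorization header value we expect for this body."""
    key = base64.b64decode(security_token)
    digest = hmac.new(key, body, hashlib.sha256).digest()
    return "HMAC " + base64.b64encode(digest).decode("ascii")


def is_valid_request(body: bytes, auth_header: str, security_token: str) -> bool:
    """True if the header matches; compare_digest avoids timing leaks."""
    return hmac.compare_digest(expected_auth_header(body, security_token), auth_header)
```

The web service behind the webhook would call `is_valid_request` with the raw body and the incoming `Authorization` header before doing any work.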

Facebook Messenger bots have access to a huge audience. Message templates give developers a quick start for creating bots with attractive images and interactive features. The wit.ai Bot Engine lets them set up natural-language conversations. The millions of people who regularly use Facebook Messenger will find that bots for the services they need fit naturally into their mobile experience.

The style and level of support for bots are different for each conversational platform. Developers who are willing to put everything on the back end and use just plain text can use any platform, including SMS. Environments with built-in bot support, on the other hand, make the development cycle quicker and allow more kinds of features.

The Advantages of a Chatbot

Online visitors can find chatbots everywhere. However, not every kind of interaction works as a chatbot. The approach is most effective when the user can make text queries or selections. It helps if a large amount of visual interaction isn’t necessary. These are some of the qualities that should make you think about a bot as a solution:

- The interaction is basically back and forth.

- People want quick answers to simple questions.

- The goal is to engage prospective customers and satisfy existing ones.

- The user base is familiar with a messaging platform that supports bots.

- The set of tasks to perform is reasonably limited and normally doesn’t require human interaction.

Above all else, chatbots should facilitate easy communication.

The Future of Chatbots

As technology advances, new and more sophisticated kinds of chatbots will appear on the market. Also, natural language processing will improve, bringing bots closer to giving the impression of a human assistant. Today, most bots use a text interface, but voice recognition will play a growing role. People will literally be able to talk with them and get the answers they need. Siri and Alexa are simple examples compared to what the future will bring.

The future interface will likely combine voice, text, and visual elements. Sometimes users need the precision of a text interface. Sometimes seeing a graphic response is better than getting a spoken one. Therefore, the future chatbot will be a multimedia experience.

Do you have ideas for a user interface that will help your employees or customers? Perhaps a Slack bot or other chatbot is the ideal way to maintain contact with people or let them do their jobs better. Reach out to one of our experts. We’ll help you build an app that will give you a unique advantage.

About Microsoft Silverlight

Silverlight is a Rich Internet Application framework developed by Microsoft. Like other RIA frameworks such as Adobe Flash, it enables software developers to create applications that have a consistent appearance and functionality across different web browsers and operating systems. Silverlight provides users with a rich experience, with built-in support for vector graphics, animation, and audio and video playback.

Although Silverlight is still a relatively new technology, it has already been used for live streaming of high-profile events such as the 2008 Beijing Olympics and the 2009 Presidential Inauguration. It is also being used by major sports organizations to broadcast live content.

AWS Lambda and Virtual Machines | Use Cases and Pricing

This article compares AWS Lambda and virtual machines, discussing when to use each and digging into pricing.

A virtual machine isn’t the only way to get computing power on AWS, and it isn’t always the most cost-effective. Sometimes you just need to set up a service that will perform a task on demand, and you don’t care about the file system or runtime environment. For cases like these, AWS Lambda may well be the better choice.

Amazon makes serious use of Lambda for internal purposes. It’s the preferred way to create “skills,” extended capabilities for its Alexa voice assistant. The range of potential uses is huge.

AWS Lambda and virtual machines both exist on a spectrum of abstraction, along which you take on less and less of the responsibility for managing and patching whatever runs your code. Lambda sits further along that spectrum than a VM, so it is usually the better bet when your use case is a good fit.

What is AWS Lambda?

Note: For a full analysis breaking down what AWS Lambda is, with pricing examples, see our earlier post, “What is AWS Lambda – and Why You’re About to Become a Huge Fan”.

Lambda is a “serverless” service. It runs on a server, of course, like anything else on AWS. The “serverless” part means that you don’t see the server and don’t need to manage it. What you see are functions that will run when invoked.
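In Python, the function Lambda invokes is an ordinary function that receives the invocation payload (`event`) and a runtime `context` object; its return value becomes the result of the call. This is a minimal sketch: the `name` field is an assumed payload shape, and the handler name is set in the function’s configuration (`lambda_handler` is the conventional default for Python).

```python
# Minimal AWS Lambda handler in Python. Lambda calls the configured handler
# with the invocation payload (event) and a runtime context object; the
# return value is the function's result. The "name" field is an assumption.

import json


def lambda_handler(event, context):
    name = event.get("name", "world")
    return {"message": f"Hello, {name}!"}


if __name__ == "__main__":
    # Local smoke test; in Lambda, the service supplies a real context object.
    print(json.dumps(lambda_handler({"name": "Entrance"}, None)))
```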

You pay only per invocation. If there are no calls to the service for a day or a week, you pay nothing. There’s a generous zero-cost tier. How much each invocation costs depends on the amount of computing time and memory it uses.

The service scales automatically. If you make a burst of calls, each one runs separately from the others. Lambda is stateless; it doesn’t remember anything from one invocation to the next. It can call stateful services if necessary, such as Amazon S3 for storing and retrieving data. These services carry their own costs as usual.

Lambda supports programming in Node.js, Java, Go, C#, and Python.

Comparison with EC2 instances

When you need access to a virtual machine, Amazon EC2 offers three main ways to obtain one. Each VM is called an instance.

On-demand instances charge per second or per hour of usage, and there’s no compute charge while they’re stopped. The difference from Lambda is that the instance is a full computing environment. An application running on it can read and write local files, invoke services on the same machine, and maintain the state of a process. It has an IP address and can make network services available.

Reserved instances commit the customer to a term, typically one or three years, in exchange for a lower rate, and billing covers the whole term. They’re suitable for running ongoing processes or handling nearly continuous workloads.

Spot instances are discounted instances that run on spare capacity. AWS can interrupt them when it needs the capacity back; depending on configuration, an interrupted instance is terminated, stopped, or hibernated, and a hibernated instance can resume later from where it left off. This approach has something in common with Lambda, in that it’s used intermittently and charges only for usage, but it’s still a full VM, with all the abilities that implies. Unlike Lambda, it’s not suitable for anything that needs real-time attention; it could be minutes or longer before a spot instance can run.

Use cases for Lambda

Making the right choice between AWS Lambda and virtual machines means considering your needs and making sure the use case matches the approach.

The best uses for Lambda are ones where you need “black box” functionality. The function can read and write a database or invoke a remote service, but it doesn’t need any persistent local state for the operation; parameters can supply whatever state each invocation needs. Good candidates include:

- Complex numeric calculations, such as statistical analysis or multidimensional transformations

- Heavy-duty encryption and decryption

- Conversion of a file from one format to another

- Generating thumbnail images

- Performing bulk transformations on data

- Generating analytics
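As an illustration of the file-conversion case, here is a sketch of a stateless CSV-to-JSON function. Everything it needs, including the optional delimiter, arrives as parameters in the event. Passing the file inline as `event["csv"]` is an assumption made to keep the example self-contained; a real deployment would more likely read the input from Amazon S3.

```python
# Sketch of a stateless format-conversion function (CSV to JSON).
# All inputs arrive in the event; nothing is kept between invocations.
# Assumption: the CSV text is passed inline as event["csv"].

import csv
import io
import json


def lambda_handler(event, context):
    delimiter = event.get("delimiter", ",")  # per-invocation parameter
    reader = csv.DictReader(io.StringIO(event["csv"]), delimiter=delimiter)
    records = list(reader)  # one dict per data row, keyed by the header
    return {"count": len(records), "json": json.dumps(records)}
```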

Invoking a Lambda service is called “triggering.” This can mean calling a function directly, setting up an event which makes it run, or running on a schedule. With the Amazon API Gateway, it’s even possible to respond to HTTP requests.
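When API Gateway triggers a function through its Lambda proxy integration, the HTTP request arrives as the event, and the function returns a dictionary with a status code, headers, and a string body. The sketch below assumes that integration type; the `sku` query parameter and the echoed response are hypothetical placeholders for real lookup logic.

```python
# Sketch of a handler behind Amazon API Gateway (Lambda proxy integration).
# The HTTP request arrives as the event; the function returns statusCode,
# headers, and a string body. The "sku" parameter is hypothetical.

import json


def lambda_handler(event, context):
    # queryStringParameters is None when the request has no query string
    params = event.get("queryStringParameters") or {}
    sku = params.get("sku")
    if not sku:
        return {
            "statusCode": 400,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({"error": "missing 'sku' parameter"}),
        }
    # Placeholder: a real function would look up data for the SKU here.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"sku": sku}),
    }
```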

AWS Step Functions, which are part of the AWS Serverless Platform, enhance what Lambda can do. They let a developer define an application as a series of steps, each of which can trigger a Lambda function. Step Functions implement a state machine, providing a way to get around Lambda’s statelessness. Applications can handle errors and perform retries. It’s not the full capability of a programming language, but this approach is suitable for many kinds of workflow automation.
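Step Functions workflows are defined in the JSON-based Amazon States Language. The sketch below builds a two-step definition as a Python dictionary: each `Task` state triggers a Lambda function, the first step retries failures with exponential backoff, and anything that still fails is routed to a `Fail` state. By default, each step’s output becomes the next step’s input, which is how state carries through the workflow. The function ARNs, account number, and state names are hypothetical placeholders.

```python
# Sketch of a Step Functions state machine in Amazon States Language (ASL),
# built as a Python dict and serialized to JSON. ARNs and state names are
# hypothetical placeholders.

import json

EXTRACT_ARN = "arn:aws:lambda:us-east-1:123456789012:function:extract"
TRANSFORM_ARN = "arn:aws:lambda:us-east-1:123456789012:function:transform"

definition = {
    "Comment": "Two-step workflow with retries and an error handler",
    "StartAt": "Extract",
    "States": {
        "Extract": {
            "Type": "Task",
            "Resource": EXTRACT_ARN,
            # Retry failed tasks with exponential backoff: 2s, 4s, 8s
            "Retry": [{
                "ErrorEquals": ["States.TaskFailed"],
                "IntervalSeconds": 2,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }],
            # Any error left after retries goes to the Failed state
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "Failed"}],
            "Next": "Transform",
        },
        "Transform": {"Type": "Task", "Resource": TRANSFORM_ARN, "End": True},
        "Failed": {"Type": "Fail", "Error": "ExtractFailed"},
    },
}

if __name__ == "__main__":
    print(json.dumps(definition, indent=2))
```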

AWS Lambda and Virtual Machines | Comparing costs

Check out Amazon’s pricing calculators for full details on Lambda pricing and EC2 pricing.

As with the other factors in comparing AWS Lambda and virtual machines, Lambda wins on cost when your use case suits it and usage is relatively low.

“Relatively low” leaves a lot of headroom. The free tier covers the first million requests and 400,000 GB-seconds of compute time each month. Customers that don’t need more than that can use the free tier with no expiration date. If they use more, the cost at Amazon’s standard rates is $0.0000002 per request (just 20 micro-cents, or $0.20 per million requests), plus $0.00001667 per GB-second.

The lowest on-demand price for an EC2 instance is $0.0058 per hour. By simple division, neglecting the GB-second cost, a Lambda service can be triggered up to 29,000 times per hour and be more cost-effective.
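The division above can be extended to include the GB-second charge. This rough sketch uses the prices quoted in this section and ignores the free tier; the 128 MB memory size and 100 ms duration in the example are assumptions, not measurements.

```python
# Rough break-even check between Lambda and the cheapest on-demand EC2
# instance, using the prices quoted in this section. The free tier is
# ignored; memory size and duration in the example are assumptions.

PRICE_PER_REQUEST = 0.0000002      # dollars per Lambda request
PRICE_PER_GB_SECOND = 0.00001667   # dollars per GB-second of Lambda compute
EC2_PRICE_PER_HOUR = 0.0058        # cheapest on-demand EC2 price, dollars/hour


def lambda_cost_per_request(memory_gb: float, duration_s: float) -> float:
    """Request charge plus compute charge for a single invocation."""
    return PRICE_PER_REQUEST + memory_gb * duration_s * PRICE_PER_GB_SECOND


def break_even_requests_per_hour(memory_gb: float, duration_s: float) -> float:
    """Requests per hour at which Lambda costs the same as the EC2 instance."""
    return EC2_PRICE_PER_HOUR / lambda_cost_per_request(memory_gb, duration_s)


if __name__ == "__main__":
    # Ignoring compute time reproduces the simple division in the text.
    print(round(break_even_requests_per_hour(0.0, 0.0)))
    # A 128 MB (0.125 GB) function that runs for 100 ms per request.
    print(round(break_even_requests_per_hour(0.125, 0.1)))
```

Ignoring compute time reproduces the 29,000 figure. For the assumed 128 MB, 100 ms function, the compute charge roughly doubles the per-request cost, so the break-even point drops to roughly 14,200 requests per hour.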

Many factors come into play, of course. If each request involves a lot of processing, the costs will go up. A compute-heavy service on EC2 could require a more powerful instance, so the cost will be higher either way.

Some needs aren’t suitable for a Lambda environment. A business that needs detailed control over the runtime system will want to stay with a VM. Some cases can be managed with Lambda but will require external services at additional cost. When using a virtual machine, everything might be doable without paying for other AWS services.

The benefits of simplicity

When choosing between AWS Lambda and virtual machines, the rule is to use the simpler method as long as it meets your needs. If a serverless service is all that’s needed, then the simplicity of managing it offers many benefits beyond the monthly bill. There’s nothing to patch except your own code, and the automatic scaling feature means you don’t have to worry about whether you have enough processing power. It isn’t necessary to set up and maintain an SSL certificate. That frees up IT people to focus their attention elsewhere.

With the Lambda service, Amazon takes care of all security issues except for the customer’s own code. This can mean a safer environment with very little effort. It’s necessary to limit access to authorized users and to protect those accounts, but the larger runtime environment is invisible to the customer. Amazon puts serious effort into defending its servers, making sure all vulnerabilities are promptly fixed.

With low cost, simple operation, and built-in scalability, Lambda is an effective way to host many kinds of services on AWS.

The Entrance ‘What, Why?’ Series: React Edition

Welcome to the ‘What, Why?’ series hosted by Entrance. The goal of this series is to take different technologies and examine them from a technical perspective to give our readers a better understanding of what they have to offer. The web is filled with tons of resources on just about anything you could imagine, so it can be really challenging to filter out all of the noise that can get in the way of your understanding. This series focuses on the two biggest cornerstones of understanding new tools: What is it, and why use it? So, without further ado, let’s kick off this first edition with something you’ve probably heard of: React!

React, quite simply, is getting big. It’s one of the most starred JavaScript libraries on GitHub, so it goes without saying that it’s garnered lots of followers, as well as fanboys. React was originally developed by Facebook for internal use, and many will be quick to point out that instagram.com is completely written with React. Now, it’s open source, backed not just by Facebook but by thousands of developers worldwide, and considered by many to be the ‘future of web development’. React is still new-ish, but has had time to mature. So, what is it?

What is React?

A JavaScript Library

First and foremost: React is a JavaScript library. Even if some call it a framework, it is NOT. It is ONLY a view layer. What is the purpose of the library? To build user interfaces. React presents a declarative, component-based approach to UIs, aimed at making interfaces that are interactive, stateful, and reusable. It revolves around designing your UI as a collection of components that render based on their respective states.

Paired with XML Syntax

React is also often paired with JSX, or JavaScript XML. JSX adds XML-like syntax to JavaScript, but it is NOT necessary in order to use React. You can use React without JSX, but JSX definitely helps make things cleaner and more elegant.

‘Magical’ Virtual DOM

A distinguishing aspect of React is that it uses something magical called ‘The Virtual DOM’. Conceptually, the virtual DOM is like a clone of the real DOM. Let’s make the analogy of your DOM being a fancy schmancy new sports car of your choice. Your virtual DOM is a clone of this car. Now, let’s say you want to trick out your sweet new ride with some killer spinny rims, wicked flame decals on the sides and, the classic cherry on top, a pair of fuzzy dice hanging from the rear-view mirror. When you apply these changes to the clone, React runs a diffing algorithm that compares your updated virtual DOM (your now-tricked-out clone) with its previous version, which matches the real DOM (the car), and identifies what has changed. Next, it reconciles the real DOM with the results of the diff. So, really, instead of taking your car and completely rebuilding it from scratch, it only changes the rims, sides, and rear-view mirror!
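To make the car analogy concrete, here is a conceptual sketch of tree diffing in Python. It is not React’s actual reconciliation algorithm, which also uses keys, component types, and scheduling; it only shows the core idea of comparing the previous virtual tree with the updated one and emitting patches for just the parts that changed. The node format, the `h` helper, and the patch names are assumptions made for illustration.

```python
# Conceptual virtual-DOM diffing sketch; NOT React's actual algorithm.
# A virtual node is a text string or a dict with a tag, props, and
# children. diff() compares the previous tree with the updated one and
# returns only the patches needed to bring the real DOM up to date.

from typing import Any, List, Optional, Tuple, Union

VNode = Union[str, dict]
Patch = Tuple[str, str, Any]  # (operation, path, payload)


def h(tag: str, props: Optional[dict] = None, *children: VNode) -> dict:
    """Create a virtual node (hypothetical helper, loosely JSX-like)."""
    return {"tag": tag, "props": props or {}, "children": list(children)}


def diff(old: VNode, new: VNode, path: str = "root") -> List[Patch]:
    if old == new:
        return []  # identical subtree: leave the real DOM alone
    if isinstance(old, str) or isinstance(new, str) or old["tag"] != new["tag"]:
        return [("replace", path, new)]  # different node type: swap it out
    patches: List[Patch] = []
    if old["props"] != new["props"]:
        patches.append(("set_props", path, new["props"]))
    old_kids, new_kids = old["children"], new["children"]
    common = min(len(old_kids), len(new_kids))
    # Recurse into children present in both trees
    for i in range(common):
        patches.extend(diff(old_kids[i], new_kids[i], f"{path}/{i}"))
    # Children only in the new tree are inserted
    for i in range(common, len(new_kids)):
        patches.append(("insert", f"{path}/{i}", new_kids[i]))
    # Children only in the old tree are removed, last first, so earlier
    # indices stay valid while the patches are applied in order
    for i in reversed(range(common, len(old_kids))):
        patches.append(("remove", f"{path}/{i}", None))
    return patches


if __name__ == "__main__":
    car = h("car", {}, h("rims", {"style": "stock"}), h("sides"), h("mirror"))
    tricked_out = h(
        "car", {},
        h("rims", {"style": "spinny"}),
        h("sides", {"decals": "flames"}),
        h("mirror", {}, h("dice", {"fuzzy": True})),
    )
    for patch in diff(car, tricked_out):
        print(patch)
```

Diffing the stock car against the tricked-out one yields patches for the rims, the sides, and a new child under the mirror, while the car node itself is left untouched.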

Why React?

Component Modularity

Now that we’ve got a reasonable understanding of what React is, we can ask: Why use it? One of the biggest pluses to React is the modularity caused by its paradigm. Your UI will be split into customizable components that are self-contained and easily reused across multiple projects; something everyone can appreciate. Not only that, but components make testing and debugging less of a hassle as well.

Popular Support

The popularity of React also plays to its advantage. Being fully supported by Facebook AND thousands of developers means there will be plenty of resources around for your perusing, and tons of knowledgeable, friendly folks eager to help you if you get stuck along the way. That also means there’s plenty of people working towards constantly improving the React library!

JSX + Virtual DOM

JSX is a nifty little plus: it isn’t required, but using it makes writing and maintaining your components even more straightforward and easy.

Great Performance

Topping off our list of pros, we’ve got the virtual DOM. It opens up the capability of server-side rendering, meaning we can take that DOM clone, render it on our server, and serve up some fresh server-side React views. Thanks to React’s virtual DOM, pages are quicker and more efficient. The performance gains are quite real when you are able to greatly reduce the number of costly DOM operations.

Why Not React?

As great as anything is, it can be just as important to know why you SHOULDN’T use it. Nothing is the perfect tool for absolutely everything, so why wouldn’t you use React? Well…

Don’t Use React Where it’s Not Needed

React shines when you’ve got dynamic, responsive web content to build. If your project won’t include any of that, React might just not be needed, and could result in you adding a lot of unnecessary code. Another downer is that, if you opt not to use JSX with your React components, your project can get kind of messy and harder to follow.

Understand the React Toolchain

Some people don’t realize that React only represents the view. That means you’ll have to bring in other technologies in order to get something fully functioning off the ground. Developers typically recommend using Redux with React, as well as Babel, Webpack, npm… so if you’re not already familiar with the common accompaniments of React, you could end up biting off more than you want to chew.

So in the end, should you use React? Is the hype train worth jumping on? Well, that’s completely dependent on your project and the constraints involved. It’s something that you and your team will have to decide together. Hopefully after reading this article, you are able to recognize what React is, as well as why you should keep it in mind for the future. If you’re interested in a deeper dive into the subjects discussed here, check out the React site! We hope you were able to take away some good info from this edition of ‘What, Why?’, and if you’re looking for more, be on the lookout for our upcoming post on React Native!

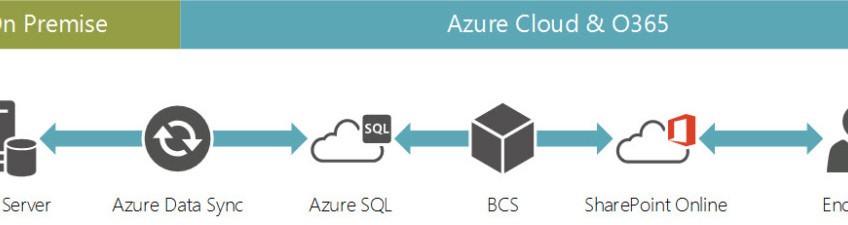

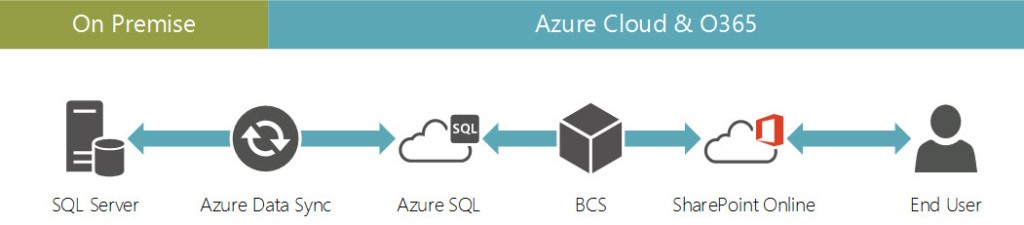

Office 365 + SharePoint + Azure Data Sync = Easy Access to Line of Business Data

One of the first questions asked by potential adopters of Microsoft Office 365 is:

“How easy is it to access our critical line of business data stored on premise from SharePoint in the cloud?”

Unfortunately, with the initial versions of SharePoint Online, there really wasn’t a great answer. Transforming external data into the required metadata took custom development. This alone deterred many organizations from making the move to the cloud or a hybrid cloud solution. Thankfully, as Microsoft evolves its cloud platform, Azure, the O365 community gets some cool new toys to play with: specifically, Azure SQL, Azure Data Sync, and Business Connectivity Services (BCS), which together address this data access challenge.

For those of you unfamiliar with Azure SQL, it is a hosted service of Microsoft’s SQL Server. Pricing is per hour and you pay for storage and backups, but not the underlying virtual machine or operating system like you would with a standard SQL Server virtual machine. Azure SQL dramatically reduces footprint and maintenance costs, but these cost benefits do come with some limitations that I’ll address in a future blog post.

Azure Data Sync allows you to synchronize data and schema between SQL Server and Azure SQL without any custom development. While it also has some limitations and requires one to follow a series of configuration steps, it works well considering it is still in “Preview”.

In most cases, a SQL View on existing tables can be used to work around a restriction of either Azure SQL or Data Sync.

Much like Azure SQL, the O365 version of SharePoint has some limitations compared to its on premise counterpart, but it also includes some unique tools such as Business Connectivity Services (BCS). BCS allows you to create External Content Types and External Data Columns, which function like a standard Lookup Column but are backed by a SQL database instead of a SharePoint List.

As you might have guessed, though, the current version of BCS cannot communicate with on premise SQL Servers. It can, however, connect to Azure SQL instances.

Putting all the pieces together, Azure Data Sync moves data from on premise to an Azure SQL instance, which BCS can connect to and expose via External Content Types. Simple, clean, and no custom development required.

The visualization below illustrates how these systems can be integrated.

As Microsoft continues to develop these services and release new tools, the differences between on premise SharePoint and O365 will continue to dwindle. Likewise, the argument against migrating to O365 will be much harder to support, especially now that you can easily connect to your line of business data.

There Will Be Bugs! | Application Development Blog Series

Best practices for minimizing the impact and mitigating costs associated with fixing software bugs

First of all, let’s clear something up: what is a bug? A bug is a flaw in software that results in the application not functioning as intended, and is something that is theoretically preventable.

How custom software ages | Application Development Video Series, Episode 1

We don’t typically think of software as something that can age. The code is written and run on a computer, so it doesn’t age the way hardware or people do. Instead, it becomes obsolete or less functional, unable to keep up with the demands of the business and its users. Software is usually written based on a snapshot of a business need at a certain point in time. Unfortunately, software doesn’t change dynamically the way the business does. Business processes, market demands, and the number of users can all change, and the software can have trouble keeping up.

Toughbooks, Field Data Capture, and Disaster Recovery

In January, Entrance hosted a lunch and learn, “Field Data Capture for Oil and Gas Service Companies.” I covered some of the key considerations that companies working in the field should evaluate before implementation.

During the Q&A portion, one of the big topics of conversation was toughbooks. Field work is characteristically rough, so choosing and maintaining the right devices can be difficult.

Data Management for Oil & Gas: High Performance Computing

Data Management and Technology

The oil and gas industry is dealing with data management on a scale never seen before. One approach to quickly get at relevant data is with High Performance Computing (HPC).

HPC is dedicated to the analysis and display of very large amounts of data that need to be processed rapidly for best use.

One application is the analysis of technical plays with complex folding. In order to understand the subsurface, three dimensional high definition images are required.

The effective use of HPC in unconventional oil and gas extraction is helping drive the frenetic pace of investment, growth and development that will provide international fuel reserves for the next 50 years. Oil and gas software supported by data intelligence drives productive unconventional operations.

Keys to Business Intelligence

Five key insights from business intelligence expert David Loshin

In a recent interview, David Loshin, president of business intelligence consultancy Knowledge Integrity, Inc., named five key things organizations can do to promote business intelligence success.

Bad Oil and Gas Software: Key Concerns

Oil and gas software is an essential component for businesses in the energy industry. It allows them to respond to problems more quickly, review historical data more easily, and send reports to managers automatically.

Oil and gas software also enables the implementation of a formal process for tracking production, as opposed to the collection of spreadsheets traditionally used in this industry.

However, poor oil and gas software can also create problems, which may be classified into the areas of assets, production and revenue.

Custom Software: DIY Advantages and Disadvantages

Good Planning a Key Differentiator for Custom Software

Custom software can be a great tool to match processes to your business. The recent proliferation of do-it-yourself tools makes this even easier because they allow people who aren’t professional programmers to create their own software.

Celebrating Ten Years of Great Custom Software

A Custom Software Extravaganza

The Entrance team celebrated a decade of oil and gas custom software at the Petroleum Club on December 16th. A group of 200 honored guests and Entrance team members gathered together over drinks, dancing, and delicious food.

The menu for the event included lobster bisque, mashed potato martinis, prime rib, and a make-your-own pasta bar. For dessert, guests chose from a selection of mini desserts and a ten-year anniversary cake.

Far from being your usual cocktail event, the party also featured a photo booth and a range of video games. Choices ran the gamut from old-school pinball to the latest video game systems.

After guests had their fill of food and fun, Houston band Molly and the Ringwalds took the stage for a rocking ’80s performance. To make things even more fun, partygoers received props like wigs, boom boxes, neon gloves, and more.

Entrance rounded out the night by handing out a copy of the Book of Entrance. This pocket-sized book commemorates ten years of smart + fun with recipes, photos, interviews, and the coveted rules to Volley Pong.

For pictures of the custom software team and their guests, check out the Facebook album!

Entrance Custom Software Consultants See Doubled Growth in 2013

A Growing Team of Custom Software Consultants

2013 was a landmark year for the Entrance custom software team. Some of the business accomplishments included:

- Doubling headcount

- Increasing revenue by 50%

- Increasing work capacity by 50%

- Adding several high-profile Upstream clients

- Growing the already substantial footprint of oil and gas service company clients

A Bigger Office to Match

As the Entrance team has grown, so too has the need for office space. As a result, the footprint of the business has nearly doubled as well. Many new consultants have taken their place in what the team calls the bullpen.

Desks and cube walls can be moved around at will in this new space. This is meant to enable project teams to work together flexibly and collaboratively.

Most employees can agree that the best part of the phase two expansion is the addition of the Fishbowl. The Fishbowl features a ping-pong table, shuffleboard, golf practice areas, and a soda machine, with more fun additions to come.

Company growth is projected to continue at similar rates. As a result, planning has already begun for phase three of the office expansion!

Great Managers for Great Teams

A growing company also demonstrates the need for more managers. In the past six months, Entrance has hired a new HR manager, a sales manager, and four new project managers.

As a result, the team can now benefit from several new skill-sets, like energy warehousing.

The need to keep employees while aggressively hiring new ones has also led to an expansion in fringe benefits. Entrance team members now enjoy a fitness room, locker room, fully stocked smoothie station, gym memberships, and healthy snack options.

Strategy to Lead the Way Forward

While every successful company relies on a little luck, a good CEO will tell you that the strategy behind explosive growth has to be sound. As such, the Entrance management team rolled out a new three year strategic plan in the second half of 2013.

In addition, the team has developed a new 25-year vision for Entrance to become the world authority on software for the energy industry.

As Entrance closes the chapter on its first decade, the entire company looks towards even bigger and better things in the next ten years.

Said Entrance president Nate Richards, “It’s exciting to celebrate a decade of successful business! Our success so far really feels like the beginning of the next chapter.”

For more on the growing Entrance team, read this post about quarterly goal celebrations!

Custom Software: Four Moments of Truth

Moments of Truth for Custom Software

During a recent leadership conference, the Entrance team began brainstorming how to make our custom software consulting even better. Since then, the leadership team has started an active conversation on this topic among our consultants.

One of the main points the speaker made was that every business has moments of truth that make all the difference. For a restaurant, great food and service can be undone by a dirty floor or cockroaches. For a clothing store, even the most stylish dresses can’t make up for long lines and unfriendly clerks.

One of the Entrance values is “Improve everything,” or as some of us say, “Suck less every day.” As a potential client, you may be wondering how we live out this value.

We see moments of truth as a huge opportunity to bring this value front and center. The list below comes directly from the Entrance custom software team. These are just a few of the places where we strive to improve the quality of our work every day!

Four Moments of Truth in Software

- Any sprint demo

This is the first chance clients have to see how the Agile methodology works. It isn’t just about selling an idea. The demo has to meet our clients’ needs and show that we are efficiently delivering software that works.

- Fixing custom software bugs

Every custom software application gets bugs once in a while. A good development team will identify the problem and fix it as quickly as possible. It’s just not acceptable to say a bug is fixed if it isn’t.

- Owning mistakes

By the same token, every team makes mistakes. What defines this moment of truth is how the team owns up to its mistakes and makes things right.

On one project, the developer told the client about his mistake and then quickly fixed it. As a result, the client appreciated his work even more than they would have if there had been no mistake at all!

- Requirements sign-off

This is one of those steps near the end of the custom software process that can make all the difference in terms of satisfaction. The development team and the client sit down to review what was promised and what has been delivered.

This can help bring to the surface any gaps in the final deliverable. If any are discovered, the team can develop a plan for making it right.

Improve Everything with Custom Software

Improving everything is a value that the Entrance team must live out every day. In addition, all of these moments of truth involve a degree of transparency.

As a client, it’s your job to be clear about what you need and to stay engaged throughout the process. The result of transparency on Entrance’s side is that you always know where your project stands and how we’re delivering on your business need.

For more on quality custom software, check out our Agile series, “Getting the Most for Your Money.”

Business Intelligence Deployment Misconceptions

Deploying Business Intelligence

Business intelligence, commonly referred to as BI throughout the industry, is technology that allows a business to obtain actionable information it can then use in its day-to-day operations. While business intelligence solutions certainly have their fair share of advantages, it is also important to realize that they are not the be-all and end-all solution for guidance that many people think they are.

Businesses fall for certain business intelligence deployment misconceptions over and over again, to their detriment. Understanding these misconceptions will allow you to avoid them and use BI to its fullest potential.

The Benefits of Business Intelligence

- The information provided is accurate, fast and, most importantly, visible, which aids in making critical decisions about a business’s growth and direction.

- Business intelligence can allow for automated report delivery using pre-calculated metrics.

- Data can be delivered in real time, which increases its accuracy and reduces the overall risk to the business owner.

- Properly implemented business intelligence solutions can greatly reduce the burden on business managers to consolidate information assets, thanks to their built-in delivery and organizational benefits.

- The return on investment for organizations with regard to business intelligence is far-reaching and significant.
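To make the “pre-calculated metrics” idea concrete, here is a minimal sketch, assuming hypothetical production records (a field name, a month, and a barrel count; the names `ProductionRecord`, `precalculate_metrics`, and `render_report` are illustrative, not from any BI product). Raw data is scanned once into a summary table, and each report then reads only that table. Scheduling and delivering the report (email, dashboard) is left out.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Tuple


class ProductionRecord(NamedTuple):
    """Hypothetical raw record: one production reading for a field."""
    field: str
    month: str      # e.g. "2013-06"
    barrels: float


def precalculate_metrics(records: Iterable[ProductionRecord]) -> Dict[Tuple[str, str], float]:
    """Scan raw records once and store total barrels per (field, month)."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    for r in records:
        totals[(r.field, r.month)] += r.barrels
    return dict(totals)


def render_report(metrics: Dict[Tuple[str, str], float], month: str) -> str:
    """Build a one-month report from the pre-calculated table only."""
    lines = [f"Production report for {month}"]
    for (field, m), total in sorted(metrics.items()):
        if m == month:
            lines.append(f"{field}: {total:,.1f} bbl")
    return "\n".join(lines)


if __name__ == "__main__":
    records = [
        ProductionRecord("Eagle", "2013-06", 1200.0),
        ProductionRecord("Eagle", "2013-06", 800.0),
        ProductionRecord("Permian", "2013-06", 1500.0),
        ProductionRecord("Permian", "2013-07", 900.0),
    ]
    metrics = precalculate_metrics(records)
    print(render_report(metrics, "2013-06"))
```

Because the aggregation happens once, any number of automated reports can be generated from `metrics` without touching the raw records again, which is what makes scheduled delivery cheap.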

Business Intelligence Deployment Misconceptions

One of the most prevalent misconceptions about business intelligence deployment is the idea that the systems are fully automated right out of the box. While it is true that the return on investment for such systems can be quite significant, that is only true if the systems have been designed, managed and deployed properly.

Another common misconception is that a single business intelligence tool is all a company needs to get the relevant information to guide itself into the next phase of its operations. According to Rick Sherman, the founder of Athena IT Solutions, the average Fortune 1000 company implements no fewer than six different BI tools at any given time.

All of these systems are closely monitored, and the information provided by them is then used to guide the business through its operations. No single system will have the accuracy, speed or power to get the job done on its own.

Another widespread misconception is that all companies are already using business intelligence today and that your company has all the information it needs to stay competitive. In reality, only about 25 percent of business users have been reported as using BI technology in the past few years. That 25 percent figure is actually a plateau: growth has been stagnant for some time.

One unfortunate misconception is that “self-service” business intelligence systems mean you only need to give users access to the available data to achieve success. In reality, self-service tools often need more support than most people plan for.

This support is also required on a continuing basis to prevent the systems from returning incomplete or inconsistent data.

One surprising misconception about the deployment of business intelligence is that BI systems have completely replaced the spreadsheet as the ideal tool for analysis. In truth, many experts agree that spreadsheets are and will continue to be the only pervasive business intelligence tool for quite some time.

Spreadsheets, when used to track the right data and perform the proper analysis, have uses that shouldn’t be overlooked. Additionally, business users that find BI deployment too daunting or unwieldy will likely return to spreadsheets for all of their analysis needs.

According to the website Max Metrics, another common misconception is that business intelligence is a tool that is only to be used for basic reporting purposes.

In reality, BI can help business users identify customer behaviors and trends, locate areas of success that may have previously been overlooked and find new ways to effectively target a core audience. BI is a great deal more than just a simple collection of stats and numbers.

For more on this topic, check out our series, “What is business intelligence?”

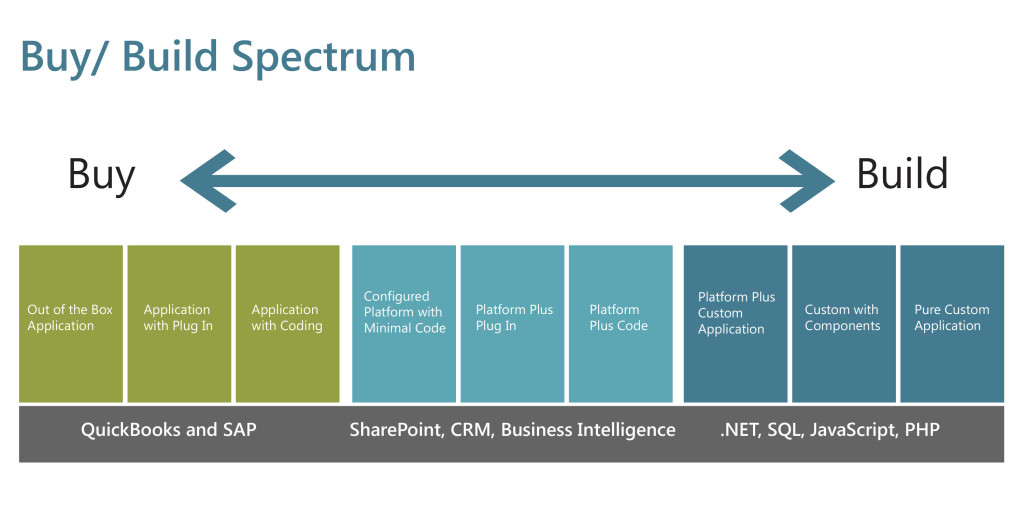

Software Selection: Assessing Business Drivers

Software Selection and Your Business

Making good choices during the software selection process is critical to your business. Whether you go with an off-the-shelf product, a custom application, or something in between, the investment in time and resources means that your company will be using that software for years to come.

We’ve started a series on software selection that will lead you through some of the key concerns around this process. Check out part one on the buy versus build spectrum here. For illustration, the infographic below can help your team understand the range of available options when it comes to software selection.

Keeping Up With Evolving Software Capabilities

Periodic reassessment of the IT solutions serving your core business needs should also be an integral part of your systems lifecycle management. We all tend to focus on our daily operations and making best use of the tools at hand, but it’s prudent to regularly research available software applications that may enable your company to work more efficiently and at less cost.

Are you using an off-the-shelf application for your major operational needs, but employing custom designed software for specialized applications? IT providers are constantly upgrading and expanding the range and depth of their software and software services. Revisiting your current applications in light of newly evolved products and services may lead you to more efficient and less expensive software solutions.

Evaluating Off the Shelf Options

When analyzing how best to meet your IT needs, examine out-of-the-box products first: they’re usually less expensive than customized solutions offering the same functionality, and they require less deployment time. Is there a proven off-the-shelf application that fulfills your operational requirements, or can do so with optional plugins or slight coding changes? If so, you should strongly consider it.

Classic examples of this are Oracle’s or SAP’s back-office applications. In the arena of E&P operations, where production data must be quickly gathered, transmitted and assessed, Merrick Systems’ eVIN software is a premier off-the-shelf solution that integrates easily with upstream business and decision-making systems.

Less easily determined is how best to support key functions such as business intelligence, which derive their data from existing databases but are supported by specialized applications structured to meet each firm’s unique needs. Some of the business intelligence applications critical to the Upstream industry, such as operational, supply chain and asset management, often employ sophisticated, integrated data management solutions available from experienced E&P software consulting firms.

Evaluating Custom Software Options

There will always be some key business functions requiring innovative customized solutions. Written in such languages as JavaScript, SQL or PHP, these applications derive their data from core databases or data warehouses, but are often built to meet the specialized requirements of senior management.

Online analytical processing (OLAP) tools are an example of such customized applications. Created by software consultants to help users interactively analyze multidimensional data, OLAP tools rely on information drawn from a company’s data warehouse.
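The two core OLAP operations, rolling data up along some dimensions and slicing it on a fixed value, can be illustrated with a small in-memory sketch. It assumes a hypothetical fact table of (field, product, year, volume) rows standing in for data-warehouse records; real OLAP tools do this over far larger cubes, usually with precomputed aggregates.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# Hypothetical warehouse facts: (field, product, year, volume)
Fact = Tuple[str, str, int, float]
FACTS: List[Fact] = [
    ("North", "oil", 2012, 120.0),
    ("North", "gas", 2012, 80.0),
    ("North", "oil", 2013, 150.0),
    ("South", "oil", 2013, 90.0),
    ("South", "gas", 2013, 60.0),
]
DIMENSIONS = ("field", "product", "year")


def roll_up(facts: Sequence[Fact], keep: Sequence[str]) -> Dict[tuple, float]:
    """Sum the volume measure over every dimension not listed in `keep`."""
    idx = [DIMENSIONS.index(d) for d in keep]
    totals: Dict[tuple, float] = defaultdict(float)
    for row in facts:
        key = tuple(row[i] for i in idx)
        totals[key] += row[-1]
    return dict(totals)


def slice_cube(facts: Sequence[Fact], dimension: str, value) -> List[Fact]:
    """Fix one dimension to a single value (an OLAP 'slice')."""
    i = DIMENSIONS.index(dimension)
    return [row for row in facts if row[i] == value]


if __name__ == "__main__":
    # Total volume per year, across all fields and products
    print(roll_up(FACTS, ("year",)))
    # 2013 only, totalled per field
    print(roll_up(slice_cube(FACTS, "year", 2013), ("field",)))
```

Here the yearly roll-up gives 200.0 for 2012 and 300.0 for 2013, and slicing on 2013 before rolling up by field gives 150.0 for each field. Interactive OLAP front ends essentially let users chain these operations without writing code.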

Although the processes and rules that drive your business may be inherently stable, the software applications that help you manage and plan are constantly evolving. IT firms and consultants are fast developing new products to reap the burgeoning potential they see in the booming E&P industry. Periodically reassessing their offerings in light of your business needs, budget and current applications takes some time, but can return large dividends.

For help with the software selection process, sign up for a software audit today! We can help you understand how well your current software integrates, and then make recommendations on how to choose new software that fits with your existing and evolving business needs.